Java as a host language for a D

Quote from Dave Voorhis on April 24, 2020, 3:26 pm

Quote from dandl on April 24, 2020, 1:30 am

I understand the philosophy, but it seems to me that if you generate tuple classes with enough internal machinery, implementing the RA is trivial.

I agree, I don't use Join a lot in LINQ, but I do use Select heavily and Union/Minus quite a bit, and they need more or less the same machinery. Isn't that much what you're doing?

Yes, and I don't doubt that adding a few mechanisms to make it more RA-like -- like an explicit JOIN, INTERSECT, MINUS, etc. -- will be useful and I intend to add them later. My initial goal is to be able to easily use Java Streams on SQL, CSV, you-name-it sources.

I'd be interested what your tuple classes look like, if you expect to be able to add RA features later.

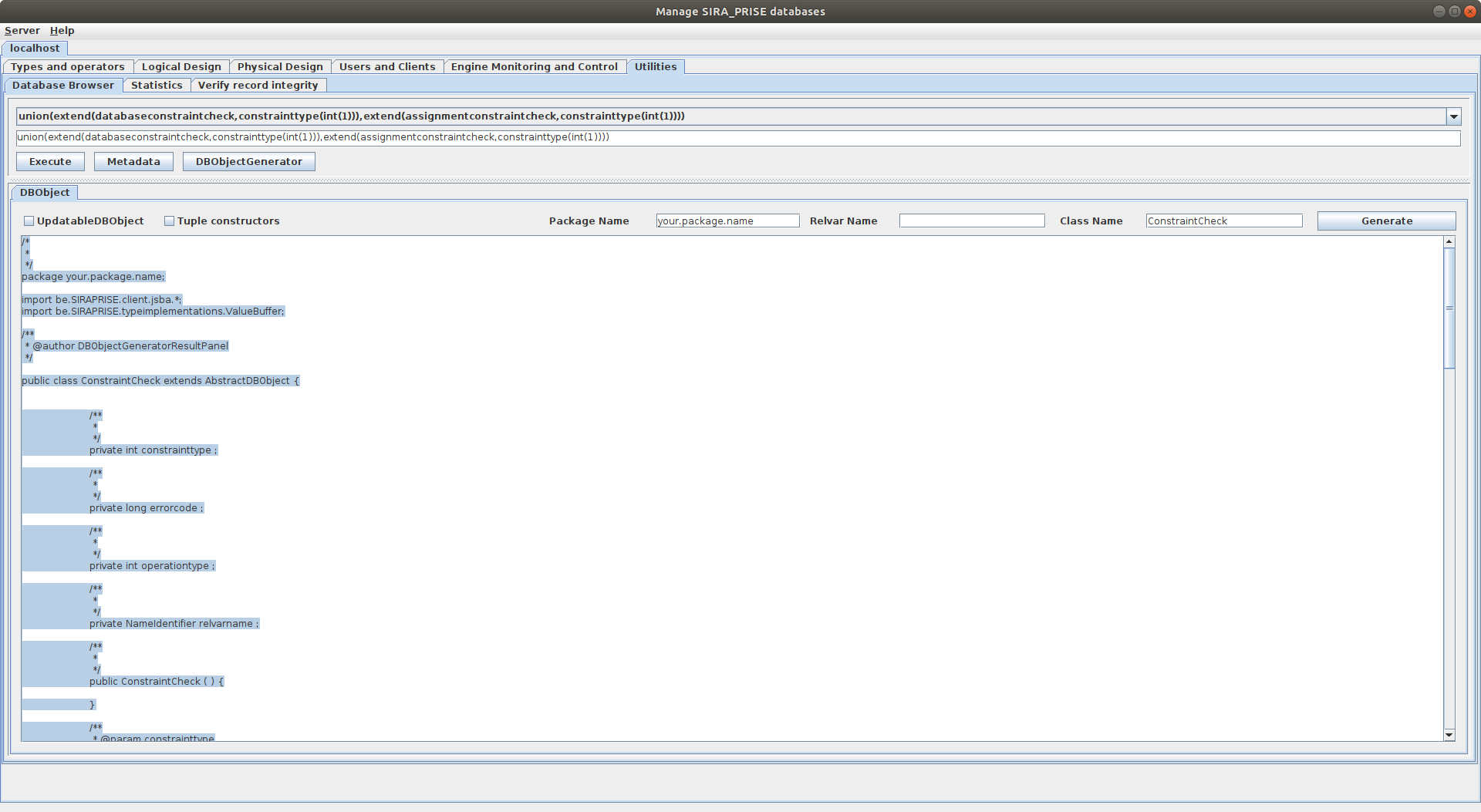

Here's the tuple class generated by my Stage 1 example:

    /** TestSelect tuple class version 0 */
    public class TestSelect extends Tuple {
        /** Version number */
        public static final long serialVersionUID = 0;

        /** Field */
        public java.lang.Integer x;

        /** Field */
        public java.lang.Integer y;

        /** Create string representation of this tuple. */
        public String toString() {
            return String.format("TestSelect {x = %s, y = %s}", this.x, this.y);
        }
    }

Ideally, it should have hashCode() and equals() definitions, but news of the new Java record implementation came along at the point where I was about to add them. I intend to use Java records, which will actually wind up being even simpler.
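For illustration only, here is a rough sketch of what a record-based version of that tuple might look like. It is an assumption about one possible shape, not the generator's actual output: the `Tuple` base class is dropped because records cannot extend a class, and `RecordSketch` is a hypothetical wrapper used only to keep the example self-contained.

```java
public class RecordSketch {
    /** Hypothetical record equivalent of the generated TestSelect tuple. */
    public record TestSelect(Integer x, Integer y) {}

    public static void main(String[] args) {
        var a = new TestSelect(1, 2);
        var b = new TestSelect(1, 2);
        // equals(), hashCode() and toString() are derived from the record
        // components, so none of them need to be generated by hand.
        System.out.println(a.equals(b));
        System.out.println(a.hashCode() == b.hashCode());
        System.out.println(a);
    }
}
```

Value-based equality is what set-style operations (duplicate removal, MINUS, INTERSECT) would rely on, which is why the missing hashCode() and equals() matter.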

I don't really get it. Step 1 seems to infer attribute name and type from the result set of an SQL query. That won't work for CSV files, where you need type hints. And it doesn't address the issue of user-defined types in the database. Do you have user-defined value types?

Yes, it infers attribute name and type from the result of an SQL query, and it does it without any effort on my part because it uses JDBC to do it. JDBC maps database types to Java types. User-defined types are limited to whatever JDBC normally does with them.
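As a hedged sketch of the idea, the following shows how column names and Java class names could be turned into tuple class source. The class `TupleSourceSketch` and its `generate` method are hypothetical and are not the project's generator; in a real run the map would presumably be filled from JDBC's `ResultSetMetaData.getColumnName` and `getColumnClassName`, which is left out here so the example runs without a database.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class TupleSourceSketch {
    /**
     * Emit source for a tuple class with one public field per column.
     * Keys are column names, values are fully qualified Java class names.
     */
    public static String generate(String className, Map<String, String> columns) {
        var src = new StringBuilder();
        src.append("public class ").append(className).append(" extends Tuple {\n");
        for (var column : columns.entrySet()) {
            src.append("    public ").append(column.getValue())
               .append(' ').append(column.getKey()).append(";\n");
        }
        src.append("}\n");
        return src.toString();
    }

    public static void main(String[] args) {
        // Stand-in for metadata that JDBC would normally supply.
        Map<String, String> columns = new LinkedHashMap<>();
        columns.put("x", "java.lang.Integer");
        columns.put("y", "java.lang.Integer");
        System.out.print(generate("TestSelect", columns));
    }
}
```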

CSV files have no type specification so I treat them as columns of strings. Converting the strings into something else is up to the user.
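To make the "up to the user" step concrete, here is a minimal sketch of converting string columns with ordinary Java Streams. The `CsvRow` and `TypedRow` records are hypothetical stand-ins, not classes from the library, and records are used only for brevity.

```java
import java.util.List;
import java.util.stream.Collectors;

public class CsvConversionSketch {
    /** Hypothetical tuple for a CSV row: every column arrives as a String. */
    public record CsvRow(String x, String y) {}

    /** Hypothetical typed tuple produced by the user's own conversion. */
    public record TypedRow(int x, int y) {}

    /** Convert string columns to ints; a NumberFormatException signals bad data. */
    public static List<TypedRow> convert(List<CsvRow> rows) {
        return rows.stream()
                .map(r -> new TypedRow(
                        Integer.parseInt(r.x().trim()),
                        Integer.parseInt(r.y().trim())))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        var rows = List.of(new CsvRow("1", "2"), new CsvRow(" 3", "4 "));
        System.out.println(convert(rows));
    }
}
```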

Step 2 bundles together a number of these to generate code, presumably a complete class definition for each tuple type, which I assume you import into the application.

Yes.

Presumably in Step 3 database assumes a standard factory method by which it can create a tuple type instance, given just the class name?

In Stage 3, it creates a tuple type instance given the tuple type class. In my example code, the tuple type class is TestSelect, so a new tuple instance is created like this:

    var tupleType = TestSelect.class;
    var tuple = tupleType.getConstructor().newInstance();

The actual code that does the heavy lifting to convert a JDBC query result set to a form that can be converted to a stream of tuples is a bit more complex. It's this:

    import java.lang.reflect.Field;
    import java.lang.reflect.InvocationTargetException;
    import java.sql.ResultSet;
    import java.sql.SQLException;

    /**
     * FunctionalInterface to define lambdas for processing each Tuple in a ResultSet.
     */
    @FunctionalInterface
    public interface TupleProcessor<T extends Tuple> {
        public void process(T tupleType);
    }

    /**
     * Iterate a ResultSet, unmarshall each row into a Tuple, and pass it to a TupleProcessor for processing.
     *
     * @param resultSet
     * @param tupleType
     * @param tupleProcessor
     *
     * @throws SecurityException - thrown if tuple constructor is not accessible
     * @throws NoSuchMethodException - thrown if tuple constructor doesn't exist
     * @throws InvocationTargetException - thrown if unable to instantiate tuple class
     * @throws IllegalArgumentException - thrown if unable to instantiate tuple class, or if there is a type mismatch assigning tuple field values, or a null argument
     * @throws IllegalAccessException - thrown if unable to instantiate tuple class
     * @throws InstantiationException - thrown if unable to instantiate tuple class
     * @throws SQLException - thrown if accessing ResultSet fails
     * @throws NoSuchFieldException - thrown if a given ResultSet field name cannot be found in the Tuple
     */
    public static <T extends Tuple> void process(ResultSet resultSet, Class<T> tupleType, TupleProcessor<T> tupleProcessor)
            throws NoSuchMethodException, SecurityException, SQLException, InstantiationException,
                   IllegalAccessException, IllegalArgumentException, InvocationTargetException, NoSuchFieldException {
        if (resultSet == null)
            throw new IllegalArgumentException("resultSet may not be null");
        if (tupleType == null)
            throw new IllegalArgumentException("tupleType may not be null");
        if (tupleProcessor == null)
            throw new IllegalArgumentException("tupleProcessor may not be null");
        var tupleConstructor = tupleType.getConstructor((Class<?>[])null);
        var metadata = resultSet.getMetaData();
        boolean optimised = false;
        // Field lookups are cached on the first row; index 0 is unused because JDBC columns are 1-based.
        Field[] fields = null;
        while (resultSet.next()) {
            var tuple = tupleConstructor.newInstance((Object[])null);
            if (optimised) {
                for (int column = 1; column <= metadata.getColumnCount(); column++) {
                    var value = resultSet.getObject(column);
                    fields[column].set(tuple, value);
                }
            } else {
                int columnCount = metadata.getColumnCount();
                fields = new Field[columnCount + 1];
                for (int column = 1; column <= columnCount; column++) {
                    var name = metadata.getColumnName(column);
                    var value = resultSet.getObject(column);
                    var field = tuple.getClass().getField(name);
                    field.set(tuple, value);
                    fields[column] = field;
                }
                optimised = true;
            }
            tupleProcessor.process(tuple);
        }
    }

I can read the Java code OK, but I can't easily infer the connections between the parts or the internal design decisions from the information given. And I can't see whether you can

?

The core questions seem to be:

- What are the key features of
  - user-defined value types
  - generated tuple types
  - generated relation types (if you have them)?
- How do they compare with the TTM/D type system?
- Do they contain sufficient machinery to implement RA features on top?

User-defined types are no more or less than what JDBC (for SQL) and other common data representations like CSV and Excel spreadsheets support now.

It's not the TTM/D type system; it's the Java type system.

It's not a relational algebra. It's Java Streams with a likely future addition of developer-friendly JOIN, MINUS, INTERSECT, etc.
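To show roughly what such additions could look like on top of plain Java Streams, here is a minimal sketch. The helper names `minus`, `intersect` and `join`, the class `StreamSetOpsSketch` and the `Pair` record are all hypothetical and are not part of the library being discussed. The set operations depend on the tuples having value-based equals() and hashCode(), which a record provides.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.BiFunction;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class StreamSetOpsSketch {
    /** Hypothetical tuple; a record supplies equals() and hashCode(). */
    public record Pair(Integer x, Integer y) {}

    /** MINUS: distinct tuples of left that do not appear in right. */
    public static <T> Stream<T> minus(Stream<T> left, Stream<T> right) {
        Set<T> exclude = right.collect(Collectors.toSet());
        return left.filter(t -> !exclude.contains(t)).distinct();
    }

    /** INTERSECT: distinct tuples of left that also appear in right. */
    public static <T> Stream<T> intersect(Stream<T> left, Stream<T> right) {
        Set<T> keep = right.collect(Collectors.toSet());
        return left.filter(keep::contains).distinct();
    }

    /** Equi-JOIN via a hash index on the right input; right keys must be non-null. */
    public static <L, R, K, O> Stream<O> join(Stream<L> left, Stream<R> right,
            Function<L, K> leftKey, Function<R, K> rightKey, BiFunction<L, R, O> combine) {
        Map<K, List<R>> index = right.collect(Collectors.groupingBy(rightKey));
        return left.flatMap(l -> index.getOrDefault(leftKey.apply(l), List.of()).stream()
                .map(r -> combine.apply(l, r)));
    }

    public static void main(String[] args) {
        var a = List.of(new Pair(1, 10), new Pair(2, 20), new Pair(3, 30));
        var b = List.of(new Pair(2, 20), new Pair(4, 40));
        System.out.println(minus(a.stream(), b.stream()).collect(Collectors.toList()));
        System.out.println(intersect(a.stream(), b.stream()).collect(Collectors.toList()));
        System.out.println(join(a.stream(), b.stream(), Pair::x, Pair::x,
                (l, r) -> l.y() + r.y()).collect(Collectors.toList()));
    }
}
```

Each helper materialises the right-hand stream in memory, so this is only suitable when that side is reasonably small.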


Quote from dandl on April 25, 2020, 6:28 am

First: the Enlighter is not a happy thing. In the posted version the comments in your code are so pale I can barely read them. Is that a theme selection? In this quoted version, all the code is duplicated, first as paragraph text with no formatting and spell-check squiggles; then as an Enlighter block in monospace font, formatted, again with spell check squiggles. It's unusable for quoting.

Re your code, that all makes sense. So

- closely tied to JDBC result sets, and no possibility of supporting user-defined types or TVA/RVA in the data, just plain old system scalars.

- no generated constructor, so relying on the database to generate classes from the result set, using reflection.

- no generated tuple type access by name, so future RA will need to access fields by reflection.
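To illustrate the last point, here is a minimal sketch of what reflection-based attribute access might look like for tuple classes with public fields. The names `ReflectiveAccessSketch`, `get`, `attributeNames`, `commonAttributes`, `Left` and `Right` are all hypothetical; this is one assumed way it could be done, not something taken from the posted code.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.LinkedHashSet;
import java.util.Set;

public class ReflectiveAccessSketch {
    /** Stand-ins for generated tuple classes with public fields. */
    public static class Left { public Integer x; public Integer y; }
    public static class Right { public Integer y; public Integer z; }

    /** Read an attribute value by name via reflection. */
    public static Object get(Object tuple, String name) {
        try {
            return tuple.getClass().getField(name).get(tuple);
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("No accessible attribute " + name, e);
        }
    }

    /** Names of the public instance fields, treated here as the tuple's attributes. */
    public static Set<String> attributeNames(Class<?> tupleType) {
        Set<String> names = new LinkedHashSet<>();
        for (Field field : tupleType.getFields()) {
            if (!Modifier.isStatic(field.getModifiers())) {
                names.add(field.getName());
            }
        }
        return names;
    }

    /** Attribute names shared by two tuple types, as a natural JOIN would need. */
    public static Set<String> commonAttributes(Class<?> a, Class<?> b) {
        Set<String> common = attributeNames(a);
        common.retainAll(attributeNames(b));
        return common;
    }

    public static void main(String[] args) {
        var left = new Left();
        left.x = 1;
        left.y = 2;
        System.out.println(get(left, "x"));
        System.out.println(commonAttributes(Left.class, Right.class));
    }
}
```

Static fields such as serialVersionUID are skipped so that only per-tuple attributes are considered.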

I'm not quite sure how this differs from a regular ORM? Or LINQ for SQL?

resultSet.getObject(column); var field = tuple.getClass().getField(name); field.set(tuple, value); fields[column] = field; } optimised = true; } tupleProcessor.process(tuple); } }I can read the Java code OK, but I can't easily infer the connections between the parts or the internal design decisions from the information given. And I can't see whether you can

?

The core questions seem to be:

- What are the key features of

  - user-defined value types

  - generated tuple types

  - generated relation types (if you have them)?

- How do they compare with the TTM/D type system?

- Do they contain sufficient machinery to implement RA features on top?

User-defined types are no more or less than what JDBC (for SQL) and other common data representations like CSV and Excel spreadsheets support now.

It's not the TTM/D type system; it's the Java type system.

It's not a relational algebra. It's Java Streams with a likely future addition of developer-friendly JOIN, MINUS, INTERSECT, etc.

First: the Enlighter is not a happy thing. In the posted version the comments in your code are so pale I can barely read them. Is that a theme selection? In this quoted version, all the code is duplicated, first as paragraph text with no formatting and spell-check squiggles; then as an Enlighter block in monospace font, formatted, again with spell check squiggles. It's unusable for quoting.

Re your code, that all makes sense. So

- closely tied to JDBC result sets, and no possibility of supporting user-defined types or TVA/RVA in the data, just plain old system scalars.

- no generated constructor, so relying on the database to generate classes from the result set, using reflection.

- no generated tuple type access by name, so future RA will need to access fields by reflection.

I'm not quite sure how this differs from a regular ORM? Or LINQ for SQL?

Quote from Dave Voorhis on April 25, 2020, 9:56 am

Quote from dandl on April 25, 2020, 6:28 am

First: the Enlighter is not a happy thing. In the posted version the comments in your code are so pale I can barely read them. Is that a theme selection? In this quoted version, all the code is duplicated, first as paragraph text with no formatting and spell-check squiggles; then as an Enlighter block in monospace font, formatted, again with spell check squiggles. It's unusable for quoting.

It's the "new and improved" Enlighter 4, which is buggy. I've reported issues to the Enlighter developer, who suggested it was a forum software problem. I've reported it to the forum software developer -- along with a link to my Enlighter bug report -- and am waiting for a response.

Re your code, that all makes sense. So

- closely tied to JDBC result sets, and no possibility of supporting user-defined types or TVA/RVA in the data, just plain old system scalars.

- no generated constructor, so relying on the database to generate classes from the result set, using reflection.

- no generated tuple type access by name, so future RA will need to access fields by reflection.

I'm not quite sure how this differs from a regular ORM? Or LINQ for SQL?

At a glance, it's possibly quite similar to LINQ for SQL, though it will support more than SQL.

ORMs are usually heavy frameworks, typically attempting to wrap SQL behind method calls and the like. I'm striving for a very lightweight minimum viable product; the simplest thing that could possibly work with the least deviation from ordinary Java.

My goal is to take everything I like about using Rel for desktop data crunching, but unplug Tutorial D and plug in Java so I can benefit from the Java ecosystem. Thus, it's an attempt to do the least amount of work needed to give Java more fluid and agile data-processing capability, whilst providing an interactive environment to leverage that capability. Think of it as "Java for Data."

It's based on my hypothesis that the strength of the relational model is not the relational algebra itself, but the fact that there is some unifying, data-abstracting algebra of some sort. Which algebra doesn't really matter, as long as it meets some minimum level of intuitiveness and flexibility. In this case, the "algebra" I'm using is Java Streams' map/fold/filter, because that's what Java developers already know (see above re "least deviation from ordinary Java.")
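To make the map/fold/filter "algebra" concrete, here is a minimal sketch, assuming a hand-written tuple class standing in for a generated one. The `Sale` class, its fields, and the sample data are hypothetical and are not part of the project being discussed; only the `java.util.stream` calls are standard.

```java
import java.util.List;
import java.util.stream.Collectors;

final class StreamsAlgebraSketch {
    /** Hypothetical tuple type; stands in for a generated tuple class. */
    static final class Sale {
        final String region;
        final int amount;

        Sale(String region, int amount) {
            this.region = region;
            this.amount = amount;
        }
    }

    /** Hypothetical sample data. */
    static final List<Sale> SALES = List.of(
            new Sale("north", 10), new Sale("south", 25), new Sale("north", 40));

    /** filter plays the role of restrict (WHERE); map plays the role of project. */
    static List<String> regionsWithLargeSales(List<Sale> sales, int threshold) {
        return sales.stream()
                .filter(s -> s.amount >= threshold)
                .map(s -> s.region)
                .distinct()
                .collect(Collectors.toList());
    }

    /** reduce is the fold: it sums the amount attribute over the whole stream. */
    static int totalAmount(List<Sale> sales) {
        return sales.stream().map(s -> s.amount).reduce(0, Integer::sum);
    }

    public static void main(String[] args) {
        System.out.println(regionsWithLargeSales(SALES, 20));
        System.out.println(totalAmount(SALES));
    }
}
```

Nothing here depends on where the tuples came from, which is the point of treating Streams as the unifying abstraction.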

The SQL portion is indeed closely tied to JDBC, but that's because SQL data sources in Java are inevitably closely tied to JDBC. I'm not concerned about user-defined types or TVAs/RVAs because JDBC/SQL doesn't support them. When I want to use rich user-defined types or TVAs/RVAs I've got Rel, where they work well.

Re "no generated constructor, so relying on the database to generate classes from the result set, using reflection."

Do you mean no authored constructor?

The tuple class can be generated, but developers can provide their own tuple classes with their own constructors -- anything inherited from the Tuple base class will work -- but they obey Java typing rules so class P extends Tuple {int x, int y} is a different type from class Q extends Tuple {int x, int y}.

Re "no generated tuple type access by name, so future RA will need to access fields by reflection."

No more so than any other Java Streams operators, which don't access fields by reflection. JOIN, for example, will need explicit reference to attribute sets to match on, but that's fine.
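One way a JOIN with an "explicit reference to attribute sets" could avoid reflection is to pass the join attributes as key-extractor lambdas. The sketch below is an assumption about what such a helper might look like, not the project's actual API; the `join` signature and the `Customer` and `Order` classes are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;
import java.util.function.Function;
import java.util.stream.Collectors;

final class KeyedJoinSketch {
    /** Hypothetical tuple types. */
    static final class Customer {
        final int id;
        final String name;

        Customer(int id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    static final class Order {
        final int customerId;
        final String item;

        Order(int customerId, String item) {
            this.customerId = customerId;
            this.item = item;
        }
    }

    /**
     * Hash join: index the right side by its key, then probe the index with
     * each left element. The join attributes are named by the two key
     * functions, so no reflection is involved.
     */
    static <L, R, K, O> List<O> join(List<L> left, List<R> right,
            Function<L, K> leftKey, Function<R, K> rightKey,
            BiFunction<L, R, O> combine) {
        Map<K, List<R>> index = right.stream().collect(Collectors.groupingBy(rightKey));
        return left.stream()
                .flatMap(l -> index.getOrDefault(leftKey.apply(l), List.<R>of()).stream()
                        .map(r -> combine.apply(l, r)))
                .collect(Collectors.toList());
    }

    /** Joins two small hypothetical collections on customer id. */
    static List<String> demo() {
        List<Customer> customers = List.of(new Customer(1, "Ann"), new Customer(2, "Bob"));
        List<Order> orders = List.of(new Order(1, "pen"), new Order(1, "ink"), new Order(3, "cup"));
        return join(customers, orders, c -> c.id, o -> o.customerId,
                (c, o) -> c.name + ":" + o.item);
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

The cost of this style is that the caller must also say how to build the result tuple, since Java cannot derive a joined type from P and Q by itself.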

Quote from dandl on April 27, 2020, 1:27 am

Re your code, that all makes sense. So

- closely tied to JDBC result sets, and no possibility of supporting user-defined types or TVA/RVA in the data, just plain old system scalars.

- no generated constructor, so relying on the database to generate classes from the result set, using reflection.

- no generated tuple type access by name, so future RA will need to access fields by reflection.

I'm not quite sure how this differs from a regular ORM? Or LINQ for SQL?

At a glance, it's possibly quite similar to LINQ for SQL, though it will support more than SQL.

ORMs are usually heavy frameworks, typically attempting to wrap SQL behind method calls and the like. I'm striving for a very lightweight minimum viable product; the simplest thing that could possibly work with the least deviation from ordinary Java.

Not convinced. The strength of LINQ (and perhaps streams) is the ability to switch mental model. Instead of traditional 'ordinary Java' with static collections, loops and updates you get to think of a flow of records through a pipe of operations. Instead of passing a collection to a function you get to think about applying a function by inserting it into the pipe. This is a long way from 'ordinary Java' as we've known it for the past 15 years or so. This part of Java has only been around since v8, about 5 years or so. Still lots of older code around.

My goal is to take everything I like about using Rel for desktop data crunching, but unplug Tutorial D and plug in Java so I can benefit from the Java ecosystem. Thus, it's an attempt to do the least amount of work needed to give Java more fluid and agile data-processing capability, whilst providing an interactive environment to leverage that capability. Think of it as "Java for Data."

OK.

It's based on my hypothesis that the strength of the relational model is not the relational algebra itself, but the fact that there is some unifying, data-abstracting algebra of some sort. Which algebra doesn't really matter, as long as it meets some minimum level of intuitiveness and flexibility. In this case, the "algebra" I'm using is Java Streams' map/fold/filter, because that's what Java developers already know (see above re "least deviation from ordinary Java.")

No, I don't buy that. The RA of Codd and SQL competed in the early days with a perfectly plausible alternative 'network' model of data, and it looks like that's where you're headed. Without JOIN you have no way to combine disparate data sources, except by exploiting hard-wired navigation paths.

The SQL portion is indeed closely tied to JDBC, but that's because SQL data sources in Java are inevitably closely tied to JDBC. I'm not concerned about user-defined types or TVAs/RVAs because JDBC/SQL doesn't support them. When I want to use rich user-defined types or TVAs/RVAs I've got Rel, where they work well.

But that means you restrict yourself to JDBC data sources, and you depend on being able to write SQL, if available.

Re "no generated constructor, so relying on the database to generate classes from the result set, using reflection."

Do you mean no authored constructor?

I was under the impression that the class you showed was generated, and it had no constructor other than the default.

The tuple class can be generated, but developers can provide their own tuple classes with their own constructors -- anything inherited from the Tuple base class will work -- but they obey Java typing rules so class P extends Tuple {int x, int y} is a different type from class Q extends Tuple {int x, int y}.

So this is the key decision: POJO. Once you make that decision, you need one or more 'data factories' that can take a POJO and fill it with data somehow, and the natural choice is to use reflection. You can construct factories for JDBC, CSV, other data sources. If you want the RA, that's OK, build another factory. The JOIN factory takes two POJOs as input, one as output; figures out the join attributes using reflection; does compares and moves data from one to the other using reflection. Once you choose POJO, all else follows.

This was the choice I made for Andl.NET. It's precisely the choice I want to avoid in the post that started this thread.
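For readers unfamiliar with the 'data factory' pattern described here, this is a minimal sketch of the idea, assuming a POJO with public fields and a row supplied as a name-to-value map. The `fill` method and the `Point` class are hypothetical illustrations; they are not taken from Andl.NET or from the Java project under discussion.

```java
import java.lang.reflect.Field;
import java.util.Map;

final class ReflectiveFactorySketch {
    /** Hypothetical POJO with public fields and a public no-arg constructor. */
    public static class Point {
        public Integer x;
        public Integer y;

        public Point() {
        }
    }

    /**
     * Creates an instance of the given type and copies each row entry into the
     * public field of the same name. Any reflection failure (missing field,
     * inaccessible constructor, type mismatch) is reported as an unchecked
     * IllegalArgumentException.
     */
    static <T> T fill(Class<T> type, Map<String, ?> row) {
        try {
            T instance = type.getConstructor().newInstance();
            for (Map.Entry<String, ?> entry : row.entrySet()) {
                Field field = type.getField(entry.getKey());
                field.set(instance, entry.getValue());
            }
            return instance;
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("cannot fill " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        Point p = fill(Point.class, Map.of("x", 3, "y", 4));
        System.out.println(p.x + ", " + p.y);
    }
}
```

The same shape would serve a CSV or JDBC factory; only the source of the name-to-value pairs changes.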

Re "no generated tuple type access by name, so future RA will need to access fields by reflection."

No more so than any other Java Streams operators, which don't access fields by reflection. JOIN, for example, will need explicit reference to attribute sets to match on, but that's fine.

Yes, I'm sure you can write a LINQ-style join in Java, if someone hasn't done it already. Been there, done that, got the T-shirt. Prediction: you aren't going to like it.

Quote from Dave Voorhis on April 27, 2020, 8:06 am

Quote from dandl on April 27, 2020, 1:27 am

Re your code, that all makes sense. So

- closely tied to JDBC result sets, and no possibility of supporting user-defined types or TVA/RVA in the data, just plain old system scalars.

- no generated constructor, so relying on the database to generate classes from the result set, using reflection.

- no generated tuple type access by name, so future RA will need to access fields by reflection.

I'm not quite sure how this differs from a regular ORM? Or LINQ for SQL?

At a glance, it's possibly quite similar to LINQ for SQL, though it will support more than SQL.

ORMs are usually heavy frameworks, typically attempting to wrap SQL behind method calls and the like. I'm striving for a very lightweight minimum viable product; the simplest thing that could possibly work with the least deviation from ordinary Java.

Not convinced. The strength of LINQ (and perhaps streams) is the ability to switch mental model. Instead of traditional 'ordinary Java' with static collections, loops and updates you get to think of a flow of records through a pipe of operations. Instead of passing a collection to a function you get to think about applying a function by inserting it into the pipe. This is a long way from 'ordinary Java' as we've known it for the past 15 years or so. This part of Java has only been around since v8, about 5 years or so. Still lots of older code around.

Not convinced of what?

I don't know about LINQ, but Java Streams has been out since 2014 (Java 8) and the latest release is Java 14. It's been considered mainstream, ordinary Java for a while.

Indeed there is lots of older code around, but it's regarded as legacy. I've no doubt there are some Java developers who avoid Streams -- much as there are C# developers who avoid LINQ (I've met them) -- but as usual, my goal is not to cater to legacy or backward-looking developers. Perhaps there's some inclination to regard LINQ as distinct from "mainstream" C# and avoid it for that or some other reason. I don't see the same with Java and Streams.

My goal is to take everything I like about using Rel for desktop data crunching, but unplug Tutorial D and plug in Java so I can benefit from the Java ecosystem. Thus, it's an attempt to do the least amount of work needed to give Java more fluid and agile data-processing capability, whilst providing an interactive environment to leverage that capability. Think of it as "Java for Data."

OK.

It's based on my hypothesis that the strength of the relational model is not the relational algebra itself, but the fact that there is some unifying, data-abstracting algebra of some sort. Which algebra doesn't really matter, as long as it meets some minimum level of intuitiveness and flexibility. In this case, the "algebra" I'm using is Java Streams' map/fold/filter, because that's what Java developers already know (see above re "least deviation from ordinary Java.")

No, I don't buy that. The RA of Codd and SQL competed in the early days with a perfectly plausible alternative 'network' model of data, and it looks like that's where you're headed. Without JOIN you have no way to combine disparate data sources, except by exploiting hard-wired navigation paths.

I've pointed out several times that I intend to augment the Streams map/fold/filter/collect model with JOIN, INTERSECT and MINUS. They're already do-able with Streams, just not very ergonomic. That's because -- as has been discussed already -- the usual assumption is that you're querying the emergent and preexisting object graph of a running application, not explicitly constructing an object graph (or dynamically inferring one via JOIN) from a collection of discrete data sources arbitrarily mapped into a running JVM.
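As an illustration of "do-able with Streams, just not very ergonomic", here is a sketch of INTERSECT and MINUS written with nothing but the standard library. The `Pair` class and the `intersect` and `minus` helper names are hypothetical. Note the assumption that the tuple class defines `equals()` and `hashCode()`, without which value-based set operations do not work.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Objects;
import java.util.Set;
import java.util.stream.Collectors;

final class SetOpsSketch {
    /** Hypothetical tuple type with value-based equality. */
    static final class Pair {
        final int x;
        final int y;

        Pair(int x, int y) {
            this.x = x;
            this.y = y;
        }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof Pair)) return false;
            Pair other = (Pair) o;
            return x == other.x && y == other.y;
        }

        @Override
        public int hashCode() {
            return Objects.hash(x, y);
        }

        @Override
        public String toString() {
            return "(" + x + "," + y + ")";
        }
    }

    /** Hypothetical sample data. */
    static final List<Pair> A = List.of(new Pair(1, 1), new Pair(2, 2), new Pair(3, 3));
    static final List<Pair> B = List.of(new Pair(2, 2), new Pair(3, 3), new Pair(4, 4));

    /** INTERSECT: keep the elements of a that also occur in b, without duplicates. */
    static <T> List<T> intersect(List<T> a, List<T> b) {
        Set<T> inB = new HashSet<>(b);
        return a.stream().filter(inB::contains).distinct().collect(Collectors.toList());
    }

    /** MINUS: keep the elements of a that do not occur in b, without duplicates. */
    static <T> List<T> minus(List<T> a, List<T> b) {
        Set<T> inB = new HashSet<>(b);
        return a.stream().filter(t -> !inB.contains(t)).distinct().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(intersect(A, B));
        System.out.println(minus(A, B));
    }
}
```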

The SQL portion is indeed closely tied to JDBC, but that's because SQL data sources in Java are inevitably closely tied to JDBC. I'm not concerned about user-defined types or TVAs/RVAs because JDBC/SQL doesn't support them. When I want to use rich user-defined types or TVAs/RVAs I've got Rel, where they work well.

But that means you restrict yourself to JDBC data sources, and you depend on being able to write SQL, if available.

For SQL data sources, I restrict myself to JDBC because SQL data sources in Java are all JDBC.

But I'm not restricted to SQL data sources. I'll be providing the same data sources that Rel currently supports. In addition to JDBC access to SQL, there's Microsoft Access databases, Excel spreadsheets, CSV files, maybe a not-before-released Hadoop connector, and the interfaces and base classes for user-developers to create their own.
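The actual interfaces and base classes are not shown in this thread, so the following is only a guess at the general shape: a data source is anything that can hand back a Stream of tuples. `TupleSource`, `CsvLines`, and `Reading` are all hypothetical names, and the CSV parsing is deliberately naive (no quoting or escaping). The row-parser lambda is where the type hints that CSV lacks would be supplied.

```java
import java.util.List;
import java.util.function.Function;
import java.util.stream.Stream;

final class DataSourceSketch {
    /** Hypothetical contract: a data source produces a Stream of tuples. */
    interface TupleSource<T> {
        Stream<T> stream();
    }

    /** Hypothetical tuple type. */
    static final class Reading {
        final String sensor;
        final double value;

        Reading(String sensor, double value) {
            this.sensor = sensor;
            this.value = value;
        }
    }

    /** Hypothetical CSV-backed source over in-memory lines; the first line is a header. */
    static final class CsvLines<T> implements TupleSource<T> {
        private final List<String> lines;
        private final Function<String[], T> rowParser;

        CsvLines(List<String> lines, Function<String[], T> rowParser) {
            this.lines = lines;
            this.rowParser = rowParser;
        }

        @Override
        public Stream<T> stream() {
            return lines.stream()
                    .skip(1)                       // skip the header row
                    .map(line -> line.split(","))  // naive split, no quoting support
                    .map(rowParser);               // apply the caller's type hints
        }
    }

    /** Sums the value column of a small hypothetical CSV. */
    static double demoTotal() {
        TupleSource<Reading> source = new CsvLines<>(
                List.of("sensor,value", "a,1.5", "b,2.5"),
                cols -> new Reading(cols[0], Double.parseDouble(cols[1])));
        return source.stream().mapToDouble(r -> r.value).sum();
    }

    public static void main(String[] args) {
        System.out.println(demoTotal());
    }
}
```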

Re "no generated constructor, so relying on the database to generate classes from the result set, using reflection."

Do you mean no authored constructor?

I was under the impression that the class you showed was generated, and it had no constructor other than the default.

The class I showed was generated, and it had no constructor other than the default.

But note that it was inherited from Tuple. You can create any classes you like inherited from Tuple with whatever constructor(s) you like, and they'll work the same way.

In other words, you aren't obligated to only use auto-generated Tuple-derived classes. You can use manually-authored ones too.
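A small sketch of what manually-authored tuple classes might look like, and of the Java typing consequence for two structurally identical classes. The empty `Tuple` class below is only a stand-in, since the real base class is not shown in this thread; `P` and `Q` follow the shorthand used elsewhere in the discussion. The public no-arg constructor is kept because the reflective loader posted earlier looks one up.

```java
final class NominalTupleSketch {
    /** Stand-in for the real Tuple base class, whose contents are not shown in the thread. */
    static abstract class Tuple {
    }

    /** Hand-authored tuple class with an extra convenience constructor. */
    static class P extends Tuple {
        public int x;
        public int y;

        public P() {
        }

        public P(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    /** Structurally identical to P, but a distinct type under Java's nominal typing. */
    static class Q extends Tuple {
        public int x;
        public int y;

        public Q() {
        }

        public Q(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    /** True only when both tuples are instances of exactly the same class. */
    static boolean sameType(Tuple a, Tuple b) {
        return a.getClass() == b.getClass();
    }

    public static void main(String[] args) {
        // P p = new Q(1, 2);  // would not compile: Q is not a P
        System.out.println(sameType(new P(1, 2), new Q(1, 2)));
        System.out.println(sameType(new P(1, 2), new P(3, 4)));
    }
}
```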

The tuple class can be generated, but developers can provide their own tuple classes with their own constructors -- anything inherited from the Tuple base class will work -- but they obey Java typing rules so class P extends Tuple {int x, int y} is a different type from class Q extends Tuple {int x, int y}.

So this is the key decision: POJO. Once you make that decision, you need one or more 'data factories' that can take a POJO and fill it with data somehow, and the natural choice is to use reflection. You can construct factories for JDBC, CSV, other data sources. If you want the RA, that's OK, build another factory. The JOIN factory takes two POJOs as input, one as output; figures out the join attributes using reflection; does compares and moves data from one to the other using reflection. Once you choose POJO, all else follows.

This was the choice I made for Andl.NET. It's precisely the choice I want to avoid in the post that started this thread.

Yes, I intentionally chose POJO because that's what makes use of Streams effective. Without POJO, no (reasonable use of) Streams, and thus once again creating something apart from the Java mainstream. My specific and explicit goal is to amplify mainstream Java and make it more data-oriented, and wherever possible do it with the existing Java core and a minimum of new frameworks, libraries, or machinery.

Re "no generated tuple type access by name, so future RA will need to access fields by reflection."

No more so than any other Java Streams operators, which don't access fields by reflection. JOIN, for example, will need explicit reference to attribute sets to match on, but that's fine.

Yes, I'm sure you can write a LINQ-style join in Java, if someone hasn't done it already. Been there, done that, got the T-shirt. Prediction: you aren't going to like it.

It's essentially already in standard Java, in a somewhat roundabout way and not as ergonomic as I'd like.

But if I was forced to use only built-in Streams and Java standard library capability, that would be fine. I already use it extensively and it would simply be more of the same.
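For comparison, the "somewhat roundabout" standard-library form of a join is typically a `flatMap` over one collection with a `filter` over the other. This is a sketch with hypothetical `Emp` and `Dept` classes and sample data. It is a nested-loop join, so it costs time proportional to the product of the two collection sizes.

```java
import java.util.List;
import java.util.stream.Collectors;

final class PlainStreamsJoinSketch {
    /** Hypothetical tuple types. */
    static final class Emp {
        final String name;
        final int deptId;

        Emp(String name, int deptId) {
            this.name = name;
            this.deptId = deptId;
        }
    }

    static final class Dept {
        final int id;
        final String title;

        Dept(int id, String title) {
            this.id = id;
            this.title = title;
        }
    }

    /** Hypothetical sample data. */
    static final List<Emp> EMPS = List.of(new Emp("Ann", 1), new Emp("Bob", 2), new Emp("Cy", 9));
    static final List<Dept> DEPTS = List.of(new Dept(1, "Sales"), new Dept(2, "Ops"));

    /** For each employee, scan every department and keep the matching pairs. */
    static List<String> join(List<Emp> emps, List<Dept> depts) {
        return emps.stream()
                .flatMap(e -> depts.stream()
                        .filter(d -> d.id == e.deptId)
                        .map(d -> e.name + " works in " + d.title))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(join(EMPS, DEPTS));
    }
}
```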

Quote from dandl on April 27, 2020, 1:27 amRe your code, that all makes sense. So

- closely tied to JDBC result sets, and no possibility of supporting user-defined types or TVA/RVA in the data, just plain old system scalars.

- no generated constructor, so relying on the database to generate classes from the result set, using reflection.

- no generated tuple type access by name, so future RA will need to access fields by reflection.

I'm not quite sure how this differs from a regular ORM? Or LINQ for SQL?

At a glance, it's possibly quite similar to LINQ for SQL, though it will support more than SQL.

ORMs are usually heavy frameworks, typically attempting to wrap SQL behind method calls and the like. I'm striving for a very lightweight minimum viable product; the simplest thing that could possibly work with the least deviation from ordinary Java.

Not convinced. The strength of LINQ (and perhaps streams) is the ability to switch mental model. Instead of traditional 'ordinary Java' with static collections, loops and updates you get to think of a flow of records through a pipe of operations. Instead of passing a collection to a function you get to think about applying a function by inserting it into the pipe. This is a long way from 'ordinary Java' as we've known it for the past 15 years or so. This prt of Java is since v8, or about 5 years or so. Still lots of older code around.

Not convinced of what?

I don't know about LINQ, but Java Streams has been out since 2014 (Java 8) and the latest release is Java 14. It's been considered mainstream, ordinary Java for a while.

Indeed there is lots of older code around, but it's regarded as legacy. I've no doubt there are some Java developers who avoid Streams -- much as there are C# developers who avoid LINQ (I've met them) -- but as usual, my goal is not to cater to legacy or backward-looking developers. Perhaps there's some inclination to regard LINQ as distinct from "mainstream" C# and avoid it for that or some other reason. I don't see the same with Java and Streams.

My goal is to take everything I like about using Rel for desktop data crunching, but unplug Tutorial D and plug in Java so I can benefit from the Java ecosystem. Thus, it's an attempt to do the least amount of work needed to give Java more fluid and agile data-processing capability, whilst providing an interactive environment to leverage that capability. Think of it as "Java for Data."

OK.

It's based on my hypothesis that the strength of the relational model is not the relational algebra itself, but the fact that there is some unifying, data-abstracting algebra of some sort. Which algebra doesn't really matter, as long as it meets some minimum level of intuitiveness and flexibility. In this case, the "algebra" I'm using is Java Streams' map/fold/filter, because that's what Java developers already know (see above re "least deviation from ordinary Java.")

No, I don't buy that. The RA of Codd and SQL competed in the early days with a perfectly plausible alternative 'network' model of data, and it looks like that's where you're headed. Without JOIN you have no way to combine disarate data sources, except by exploiting hard-wired navigation paths.

I've pointed out several times that I intend to augment the Streams map/fold/filter/collect model with JOIN, INTERSECT and MINUS. They're already do-able with Streams, just not very ergonomic. That's because -- as has been discussed already -- the usual assumption is that you're querying the emergent and preexisting object graph of a running application, not explicitly constructing an object graph (or dynamically inferring one via JOIN) from a collection of discrete data sources arbitrarily mapped into a running JVM.
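To make "do-able, just not very ergonomic" concrete, here is a minimal sketch of INTERSECT, MINUS and a JOIN written with nothing but the standard Streams API. The Supplier and Part classes and the method names are invented for this example. INTERSECT and MINUS only behave as expected because Supplier defines equals() and hashCode(), and the join has to build its result by hand (a plain string here, standing in for a result tuple).

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Objects;
import java.util.Set;
import java.util.stream.Collectors;

class SetOpsSketch {
    // Hypothetical tuple class; value equality is what INTERSECT and MINUS rely on.
    static final class Supplier {
        final int sno;
        final String city;
        Supplier(int sno, String city) { this.sno = sno; this.city = city; }
        @Override public boolean equals(Object o) {
            if (!(o instanceof Supplier)) return false;
            Supplier other = (Supplier) o;
            return sno == other.sno && city.equals(other.city);
        }
        @Override public int hashCode() { return Objects.hash(sno, city); }
        @Override public String toString() { return "S(" + sno + ", " + city + ")"; }
    }

    // Hypothetical second tuple class, only used as the right-hand side of the join.
    static final class Part {
        final int pno;
        final String city;
        Part(int pno, String city) { this.pno = pno; this.city = city; }
    }

    // INTERSECT: tuples of a that also appear in b.
    static <T> Set<T> intersect(Collection<T> a, Collection<T> b) {
        Set<T> right = new HashSet<>(b);
        return a.stream().filter(right::contains).collect(Collectors.toSet());
    }

    // MINUS: tuples of a that do not appear in b.
    static <T> Set<T> minus(Collection<T> a, Collection<T> b) {
        Set<T> right = new HashSet<>(b);
        return a.stream().filter(t -> !right.contains(t)).collect(Collectors.toSet());
    }

    // JOIN on city as a nested loop: flatMap over a filtered inner stream.
    static List<String> joinOnCity(List<Supplier> suppliers, List<Part> parts) {
        return suppliers.stream()
            .flatMap(s -> parts.stream()
                .filter(p -> p.city.equals(s.city))
                .map(p -> s.sno + "-" + p.pno + "@" + s.city))
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Supplier> s1 = Arrays.asList(
            new Supplier(1, "London"), new Supplier(2, "Paris"), new Supplier(3, "Athens"));
        List<Supplier> s2 = Arrays.asList(new Supplier(2, "Paris"), new Supplier(4, "Oslo"));
        List<Part> parts = Arrays.asList(
            new Part(10, "London"), new Part(20, "Paris"), new Part(30, "London"));
        System.out.println(intersect(s1, s2));     // [S(2, Paris)]
        System.out.println(minus(s1, s2).size());  // 2
        System.out.println(joinOnCity(s1, parts)); // [1-10@London, 1-30@London, 2-20@Paris]
    }
}
```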

The SQL portion is indeed closely tied to JDBC, but that's because SQL data sources in Java are inevitably closely tied to JDBC. I'm not concerned about user-defined types or TVAs/RVAs because JDBC/SQL doesn't support them. When I want to use rich user-defined types or TVAs/RVAs I've got Rel, where they work well.

But that means you restrict yourself to JDBC data sources, and you depend on being able to write SQL, if available.

For SQL data sources, I restrict myself to JDBC because SQL data sources in Java are all JDBC.

But I'm not restricted to SQL data sources. I'll be providing the same data sources that Rel currently supports. In addition to JDBC access to SQL, there's Microsoft Access databases, Excel spreadsheets, CSV files, maybe a not-before-released Hadoop connector, and the interfaces and base classes for user-developers to create their own.

Re "no generated constructor, so relying on the database to generate classes from the result set, using reflection."

Do you mean no authored constructor?

I was under the impression that the class you showed was generated, and it had no constructor other than the default.

The class I showed was generated, and it had no constructor other than the default.

But note that it was inherited from Tuple. You can create any classes you like inherited from Tuple with whatever constructor(s) you like, and they'll work the same way.

In other words, you aren't obligated to only use auto-generated Tuple-derived classes. You can use manually-authored ones too.

The tuple class can be generated, but developers can provide their own tuple classes with their own constructors -- anything inherited from the Tuple base class will work -- but they obey Java typing rules, so class P extends Tuple {int x; int y;} is a different type from class Q extends Tuple {int x; int y;}.

So this is the key decision: POJO. Once you make that decision, you need one or more 'data factories' that can take a POJO and fill it with data somehow, and the natural choice is to use reflection. You can construct factories for JDBC, CSV, other data sources. If you want the RA, that's OK, build another factory. The JOIN factory takes two POJOs as input, one as output; figures out the join attributes using reflection; does compares and moves data from one to the other using reflection. Once you choose POJO, all else follows.
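For readers who haven't seen the reflective approach, here is a minimal sketch of such a 'data factory'. It is an assumption about what one could look like, not how Andl.NET or the tool in this thread does it. The classes PointTuple and Other, and the methods fill, attributeNames and commonAttributes, are hypothetical. A Map stands in for one row from JDBC, CSV or any other source.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class ReflectiveFactorySketch {
    // Hypothetical POJO tuples with public fields and default constructors.
    public static class PointTuple { public Integer x; public Integer y; }
    public static class Other { public Integer y; public String z; }

    // Create a tuple of the given class and copy matching row values into its public fields.
    static <T> T fill(Class<T> type, Map<String, Object> row) {
        try {
            T tuple = type.getDeclaredConstructor().newInstance();
            for (Field f : type.getFields()) {
                if (Modifier.isStatic(f.getModifiers())) continue; // skip e.g. serialVersionUID
                if (row.containsKey(f.getName())) f.set(tuple, row.get(f.getName()));
            }
            return tuple;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot populate " + type.getName(), e);
        }
    }

    // Attribute names of a tuple class: its public, non-static field names.
    static Set<String> attributeNames(Class<?> type) {
        Set<String> names = new TreeSet<>();
        for (Field f : type.getFields()) {
            if (!Modifier.isStatic(f.getModifiers())) names.add(f.getName());
        }
        return names;
    }

    // What a reflective natural JOIN would match on: attributes present in both classes.
    static Set<String> commonAttributes(Class<?> a, Class<?> b) {
        Set<String> common = attributeNames(a);
        common.retainAll(attributeNames(b));
        return common;
    }

    public static void main(String[] args) {
        Map<String, Object> row = new HashMap<>();
        row.put("x", 3);
        row.put("y", 4);
        PointTuple t = fill(PointTuple.class, row);
        System.out.println(t.x + ", " + t.y);                                // 3, 4
        System.out.println(commonAttributes(PointTuple.class, Other.class)); // [y]
    }
}
```

Note that nothing here is checked at compile time: a misspelled column name or a type mismatch only shows up when the factory runs.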

This was the choice I made for Andl.NET. It's precisely the choice I want to avoid in the post that started this thread.

Yes, I intentionally chose POJO because that's what makes use of Streams effective. Without POJO, no (reasonable use of) Streams, and thus once again creating something apart from the Java mainstream. My specific and explicit goal is to amplify mainstream Java and make it more data-oriented, and wherever possible do it with the existing Java core and a minimum of new frameworks, libraries, or machinery.

Re "no generated tuple type access by name, so future RA will need to access fields by reflection."

No more so than any other Java Streams operators, which don't access fields by reflection. JOIN, for example, will need explicit reference to attribute sets to match on, but that's fine.
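A minimal sketch of what "explicit reference to attribute sets" could look like without reflection: the caller passes key-extractor functions and a combiner, and the join builds a hash index on one side. The join signature and the Emp and Dept classes are hypothetical, invented for this example; they are not an existing library API or the planned design.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;
import java.util.function.Function;
import java.util.stream.Collectors;

class KeyedJoinSketch {
    // Hypothetical tuple classes for illustration.
    static final class Emp {
        final String name;
        final int deptId;
        Emp(String name, int deptId) { this.name = name; this.deptId = deptId; }
    }

    static final class Dept {
        final int id;
        final String title;
        Dept(int id, String title) { this.id = id; this.title = title; }
    }

    // Equi-join: index the right side by key, then probe it once per left tuple.
    // Keys are assumed non-null (Collectors.groupingBy rejects null keys).
    static <L, R, K, O> List<O> join(Collection<L> left, Collection<R> right,
                                     Function<L, K> leftKey, Function<R, K> rightKey,
                                     BiFunction<L, R, O> combine) {
        Map<K, List<R>> index = right.stream().collect(Collectors.groupingBy(rightKey));
        return left.stream()
            .flatMap(l -> index.getOrDefault(leftKey.apply(l), Collections.emptyList()).stream()
                .map(r -> combine.apply(l, r)))
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Emp> emps = Arrays.asList(new Emp("Ann", 1), new Emp("Bob", 2), new Emp("Cy", 9));
        List<Dept> depts = Arrays.asList(new Dept(1, "Sales"), new Dept(2, "Ops"));
        List<String> out = join(emps, depts, e -> e.deptId, d -> d.id,
            (e, d) -> e.name + " works in " + d.title);
        System.out.println(out); // [Ann works in Sales, Bob works in Ops]
    }
}
```

The join attributes are ordinary lambdas, so the compiler checks them against the tuple classes; the cost is that the caller must also say how to build the result tuple.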

Yes, I'm sure you can write a LINQ-style join in Java, if someone hasn't done it already. Been there, done that, got the T-shirt. Prediction: you aren't going to like it.

It's essentially already in standard Java, in a somewhat roundabout way and not as ergonomic as I'd like.

But if I was forced to use only built-in Streams and Java standard library capability, that would be fine. I already use it extensively and it would simply be more of the same.

Quote from dandl on April 28, 2020, 1:15 am

Sorry, I wasn't trying to debate your choices, just to summarise them and get the priorities clear.

This is all a long way from the theme of this thread, which was "Java as a host language for a D". The essence of D and a big chunk of TTM is the type system and related support for the RA, so my question is about augmenting/extending the type system in Java to achieve all or most of what D requires. Your response so far has been about reasons and methods not to. Instead, your fallback is Rel, and there are reasons why that isn't a viable choice for most of us.

Here is the proposition for Java-D as I see it.

- a Java language extension to provide all or most of the benefits of the D type system, including the RA

- able to support a wide variety of data sources for input and/or update (not constrained to JDBC), and/or a local storage engine

- probably in the form of a code generator with supporting templates and classes, and preferably avoiding reflection

- consistent with and usable by ordinary Java programming techniques, including streams (not constrained to POJOs).

If successful, working programs in Rel could be semi-mechanically translated into an equivalent program in Java-D (excluding database features for now).

Do you have a view on the feasibility of this proposition?

Quote from Dave Voorhis on April 28, 2020, 7:37 am

Quote from dandl on April 28, 2020, 1:15 am

Sorry, I wasn't trying to debate your choices, just to summarise them and get the priorities clear.

This is all a long way from the theme of this thread, which was "Java as a host language for a D". The essence of D and a big chunk of TTM is the type system and related support for the RA, so my question is about augmenting/extending the type system in Java to achieve all or most of what D requires. Your response so far has been about reasons and methods not to. Instead, your fallback is Rel, and there are reasons why that isn't a viable choice for most of us.

Here is the proposition for Java-D as I see it.

- a Java language extension to provide all or most of the benefits of the D type system, including the RA

- able to support a wide variety of data sources for input and/or update (not constrained to JDBC), and/or a local storage engine

- probably in the form of a code generator with supporting templates and classes, and preferably avoiding reflection

- consistent with and usable by ordinary Java programming techniques, including streams (not constrained to POJOs).

If successful, working programs in Rel could be semi-mechanically translated into an equivalent program in Java-D (excluding database features for now).

Do you have a view on the feasibility of this proposition?

It would be relatively straightforward to turn Rel into it by altering the Rel grammar definition to syntactically resemble Java, along with machinery to load Java classes and invoke Java methods.

It would also be relatively straightforward to implement it by writing a transpiler from "Java-D" to Java, using the Rel DBMS core to provide a local storage engine and support for a wide variety of data sources. Though note that in the Java world, "a wide variety of data sources" mostly means "things accessible through JDBC" plus well-known libraries for CSV, Excel, Access, etc.

It wouldn't be Java. It would be a Java-like language that runs on the JVM, notionally similar to Kotlin and Clojure and Scala. It would be Tutorial D semantics with Java-ish syntax and JVM integration.

Quote from dandl on April 29, 2020, 8:29 am

Quote from Dave Voorhis on April 28, 2020, 7:37 am

Quote from dandl on April 28, 2020, 1:15 am

Sorry, I wasn't trying to debate your choices, just to summarise them and get the priorities clear.

This is all a long way from the theme of this thread, which was "Java as a host language for a D". The essence of D and a big chunk of TTM is the type system and related support for the RA, so my question is about augmenting/extending the type system in Java to achieve all or most of what D requires. Your response so far has been about reasons and methods not to. Instead, your fallback is Rel, and there are reasons why that isn't a viable choice for most of us.

Here is the proposition for Java-D as I see it.

- a Java language extension to provide all or most of the benefits of the D type system, including the RA

- able to support a wide variety of data sources for input and/or update (not constrained to JDBC), and/or a local storage engine

- probably in the form of a code generator with supporting templates and classes, and preferably avoiding reflection

- consistent with and usable by ordinary Java programming techniques, including streams (not constrained to POJOs).

If successful, working programs in Rel could be semi-mechanically translated into an equivalent program in Java-D (excluding database features for now).

Do you have a view on the feasibility of this proposition?