'data language' / 'programming language'

Quote from AntC on February 4, 2021, 9:59 am(starting a new thread from the 'mess of disinformation', because maybe I've taken this all out of context. So I'm leaving the back-story here just in case. And the darned quote tags have got messed up, hope I've repaired them ok ...)

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pmSo really this is the nub of the problem. We can all see the template/metadata/generation part of the solution solving 80% of the problem. What we can't see is a way to leverage that but leave room for the bits that don't quite fit.

Let's say an application that genuinely solves a reasonable customer problem is complex. Within the essential complexity of the solution there is routinely:

- 80% of regular complexity

- 20% of irregular complexity.

The regular complexity is seductively easy, because we can easily capture the regularities in tables, metadata, templates, code generation and so on. We think we're nearly done. We're not.

The irregular complexity is intractably hard. We can:

- Add features to our 'regularity' solution. That's hard work, and at the end of the day it comes to resemble code. Then it's just another language and most likely it won't get used.

- Find ways to plug in bits of custom code. Learning about all those special plug in places is hard, and mostly likely too hard.

- Go back to code first, but retain bits of the 'regularity' solution to reduce code volume. This is state of the art, this is you.

So the heart of the problem as I first stated it is that we just don't know how to integrate a regularity-based system to handle regular complexity with a code-based system to handle irregular complexity, other than method (c). And even if we know parts of the answer, and try to move up a level, it rapidly gets so (accidentally) complex we give up and go back to method (c).

Yes, (c) seems -- for all its limitations and flaws -- to invariably work better than the alternatives when we take all the factors into account.

That makes sense, because that's what code is for.

Attempts to replace code with non-code, but still do all the things that makes code powerful is, I think, doomed. That's because code is the most efficient, effective, powerful way to express the essential complexity of code. Yes, it's difficult and time-consuming and complex, but overall, the only approaches that would be less difficult, time-consuming, and complex would be everything else.

It's all code (or data). That's not the issue. It's regularity that makes the difference.

Pegasus is a PEG parser that emits C#. The generated code is striking in its regularity. You get to include snippets at various points, but the generated code is regular.

It's easy to generate UI code, or data access code, or reporting code from metadata that is regular. If you have a table of UI fields with a label, column name, display format, field validation, etc, etc in a perfectly regular structure you can generate regular code (or interpret that table directly). Tools like pgAdmin do exactly that: they rely on the regularity of the DDL. One complicated bit of code to handle one field, then just repeat for each field in the table. Done. There is code in the tool, but there is no code specific to the application: not needed.

But irregularity is the killer for this approach. Something really simple like: this field or that field can be blank, but not both. Or: this date must not be a Tuesday. Or: this number must show in red if it's more than this value. Like you said: must have for this job, don't care for that job, irregular.

So: currently the only way that consistently works is method (c). Method (a) is plain wrong, but there might be a way to do method (b) if and only if we could use metadata for the regular parts and code for the irregular parts (like Pegasus).

Do you know any products that have seriously tried to do that (and not drifted off into method a)?

Sorry, I must be missing something here. Pegasus is a parser generator. It appears to let you create languages.

Don't we already have languages that allow us to effectively create CRUD applications, like C# and Java, without the -- as we understand all too well -- undesirable tradeoffs that come from creating new languages?

Compiler-comiler tools like yacc, lex and Pegasus are not programming languages. In each case they construct a data model that conforms to a well-specified formal structure (LR, regex, PEG, etc) and then generate code to compare incoming source code to that model. The code is highly repetitive and not suitable for editing. As well, they insert snippets of user-written code to add extra checks and/or do useful work. It is perfectly possible to write lexers and parsers by hand, but you get better results using these tools. I know, I've done it both ways.

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

when we should have listened to the LISP (and Forth, and later, Haskell and other)

I'm confused why highlight those languages? (and which others?) Why not include other GP HLLs already mentioned like Java, or classic C, Fortran, COBOL (which was reasonably data-aware)? Where does Tutorial D go? Or Ds in general?

folks who went down this path before us and realised -- or knew to begin with -- that every non-programmable language is a lesser thing than an equivalent programming language. Thus, LISPers don't use (for example) a separate data or configuration language, they use LISP.

I wasn't aware LISP (or Scheme) is any sort of data language. Haskell has extensive data-structuring declarations; but is just rubbish at accessing persistent/external data or transponding between its type-safe data structures and external media. Essentially everything has to be serialised as String. Type safety gone. Most applications' persistence layer is XML/JSON as a text file.

The two key features of The Relational Model: relations as sets; tuples as indexed sets (indexed by attribute names, which are metadata); and the key feature for data structuring viz. referential integrity -- just can't be represented in Haskell (nor LISP/Scheme/Forth AFAIA).

They extend the base language, rather than trying to generate it or replace it, which only creates a rigidity, inflexibility, and impedance mismatch between the host language and the sublanguage.

The Haskell base language is lambda calculus plus sum-of-product positionally-indexed data structures. The main resource for extending is powerful polymorphism and overloading. No amount of extending that gets you to those RM key features.

I have no problem with XML/YAML/JSON/whatever if I never have to see it. If it lives purely at the serialisation/deserialisation level, then that's fine -- I'm no more likely to encounter it than the raw TCP/IP packets travelling over my WiFi and Ethernet network.

The moment I'm expected to intelligently manipulate them, consider their structure -- either directly or indirectly -- give me a full-fledged programming language to do it.

Haskell applications that access (SQL/NoSQL) databases dynamically might use sophisticated type-safe data structures to build their requests; but then there's some grubby type-unsafe Stringy back-end to raw SQL or LINQ/etc with all the impedance-mismatched semantics you'd expect.

There are few things worse to my mind than having to edit thousands of lines of data language -- knowing that it could be represented in a few lines of programming language -- because some other developer decided to make things "easier" for me.

I guess some Haskellers might not encounter the data sublanguage -- if they're working on the 'front end' of a mature application, in which someone else has built the data access layer. Then that someone has made it "easier" by exactly writing a lot of interface code straddling both the data language and the host language.

Where is this nirvana of Haskellers coding database access natively?

(starting a new thread from the 'mess of disinformation', because maybe I've taken this all out of context. So I'm leaving the back-story here just in case. And the darned quote tags have got messed up, hope I've repaired them ok ...)

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pmSo really this is the nub of the problem. We can all see the template/metadata/generation part of the solution solving 80% of the problem. What we can't see is a way to leverage that but leave room for the bits that don't quite fit.

Let's say an application that genuinely solves a reasonable customer problem is complex. Within the essential complexity of the solution there is routinely:

- 80% of regular complexity

- 20% of irregular complexity.

The regular complexity is seductively easy, because we can easily capture the regularities in tables, metadata, templates, code generation and so on. We think we're nearly done. We're not.

The irregular complexity is intractably hard. We can:

- Add features to our 'regularity' solution. That's hard work, and at the end of the day it comes to resemble code. Then it's just another language and most likely it won't get used.

- Find ways to plug in bits of custom code. Learning about all those special plug in places is hard, and mostly likely too hard.

- Go back to code first, but retain bits of the 'regularity' solution to reduce code volume. This is state of the art, this is you.

So the heart of the problem as I first stated it is that we just don't know how to integrate a regularity-based system to handle regular complexity with a code-based system to handle irregular complexity, other than method (c). And even if we know parts of the answer, and try to move up a level, it rapidly gets so (accidentally) complex we give up and go back to method (c).

Yes, (c) seems -- for all its limitations and flaws -- to invariably work better than the alternatives when we take all the factors into account.

That makes sense, because that's what code is for.

Attempts to replace code with non-code, but still do all the things that makes code powerful is, I think, doomed. That's because code is the most efficient, effective, powerful way to express the essential complexity of code. Yes, it's difficult and time-consuming and complex, but overall, the only approaches that would be less difficult, time-consuming, and complex would be everything else.

It's all code (or data). That's not the issue. It's regularity that makes the difference.

Pegasus is a PEG parser that emits C#. The generated code is striking in its regularity. You get to include snippets at various points, but the generated code is regular.

It's easy to generate UI code, or data access code, or reporting code from metadata that is regular. If you have a table of UI fields with a label, column name, display format, field validation, etc, etc in a perfectly regular structure you can generate regular code (or interpret that table directly). Tools like pgAdmin do exactly that: they rely on the regularity of the DDL. One complicated bit of code to handle one field, then just repeat for each field in the table. Done. There is code in the tool, but there is no code specific to the application: not needed.

But irregularity is the killer for this approach. Something really simple like: this field or that field can be blank, but not both. Or: this date must not be a Tuesday. Or: this number must show in red if it's more than this value. Like you said: must have for this job, don't care for that job, irregular.

So: currently the only way that consistently works is method (c). Method (a) is plain wrong, but there might be a way to do method (b) if and only if we could use metadata for the regular parts and code for the irregular parts (like Pegasus).

Do you know any products that have seriously tried to do that (and not drifted off into method a)?

Sorry, I must be missing something here. Pegasus is a parser generator. It appears to let you create languages.

Don't we already have languages that allow us to effectively create CRUD applications, like C# and Java, without the -- as we understand all too well -- undesirable tradeoffs that come from creating new languages?

Compiler-comiler tools like yacc, lex and Pegasus are not programming languages. In each case they construct a data model that conforms to a well-specified formal structure (LR, regex, PEG, etc) and then generate code to compare incoming source code to that model. The code is highly repetitive and not suitable for editing. As well, they insert snippets of user-written code to add extra checks and/or do useful work. It is perfectly possible to write lexers and parsers by hand, but you get better results using these tools. I know, I've done it both ways.

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

when we should have listened to the LISP (and Forth, and later, Haskell and other)

I'm confused why highlight those languages? (and which others?) Why not include other GP HLLs already mentioned like Java, or classic C, Fortran, COBOL (which was reasonably data-aware)? Where does Tutorial D go? Or Ds in general?

folks who went down this path before us and realised -- or knew to begin with -- that every non-programmable language is a lesser thing than an equivalent programming language. Thus, LISPers don't use (for example) a separate data or configuration language, they use LISP.

I wasn't aware LISP (or Scheme) is any sort of data language. Haskell has extensive data-structuring declarations; but is just rubbish at accessing persistent/external data or transponding between its type-safe data structures and external media. Essentially everything has to be serialised as String. Type safety gone. Most applications' persistence layer is XML/JSON as a text file.

The two key features of The Relational Model: relations as sets; tuples as indexed sets (indexed by attribute names, which are metadata); and the key feature for data structuring viz. referential integrity -- just can't be represented in Haskell (nor LISP/Scheme/Forth AFAIA).

They extend the base language, rather than trying to generate it or replace it, which only creates a rigidity, inflexibility, and impedance mismatch between the host language and the sublanguage.

The Haskell base language is lambda calculus plus sum-of-product positionally-indexed data structures. The main resource for extending is powerful polymorphism and overloading. No amount of extending that gets you to those RM key features.

I have no problem with XML/YAML/JSON/whatever if I never have to see it. If it lives purely at the serialisation/deserialisation level, then that's fine -- I'm no more likely to encounter it than the raw TCP/IP packets travelling over my WiFi and Ethernet network.

The moment I'm expected to intelligently manipulate them, consider their structure -- either directly or indirectly -- give me a full-fledged programming language to do it.

Haskell applications that access (SQL/NoSQL) databases dynamically might use sophisticated type-safe data structures to build their requests; but then there's some grubby type-unsafe Stringy back-end to raw SQL or LINQ/etc with all the impedance-mismatched semantics you'd expect.

There are few things worse to my mind than having to edit thousands of lines of data language -- knowing that it could be represented in a few lines of programming language -- because some other developer decided to make things "easier" for me.

I guess some Haskellers might not encounter the data sublanguage -- if they're working on the 'front end' of a mature application, in which someone else has built the data access layer. Then that someone has made it "easier" by exactly writing a lot of interface code straddling both the data language and the host language.

Where is this nirvana of Haskellers coding database access natively?

Quote from tobega on February 4, 2021, 12:34 pmQuote from AntC on February 4, 2021, 9:59 am(starting a new thread from the 'mess of disinformation', because maybe I've taken this all out of context. So I'm leaving the back-story here just in case. And the darned quote tags have got messed up, hope I've repaired them ok ...)

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pmSo really this is the nub of the problem. We can all see the template/metadata/generation part of the solution solving 80% of the problem. What we can't see is a way to leverage that but leave room for the bits that don't quite fit.

Let's say an application that genuinely solves a reasonable customer problem is complex. Within the essential complexity of the solution there is routinely:

- 80% of regular complexity

- 20% of irregular complexity.

The regular complexity is seductively easy, because we can easily capture the regularities in tables, metadata, templates, code generation and so on. We think we're nearly done. We're not.

The irregular complexity is intractably hard. We can:

- Add features to our 'regularity' solution. That's hard work, and at the end of the day it comes to resemble code. Then it's just another language and most likely it won't get used.

- Find ways to plug in bits of custom code. Learning about all those special plug in places is hard, and mostly likely too hard.

- Go back to code first, but retain bits of the 'regularity' solution to reduce code volume. This is state of the art, this is you.

So the heart of the problem as I first stated it is that we just don't know how to integrate a regularity-based system to handle regular complexity with a code-based system to handle irregular complexity, other than method (c). And even if we know parts of the answer, and try to move up a level, it rapidly gets so (accidentally) complex we give up and go back to method (c).

Yes, (c) seems -- for all its limitations and flaws -- to invariably work better than the alternatives when we take all the factors into account.

That makes sense, because that's what code is for.

Attempts to replace code with non-code, but still do all the things that makes code powerful is, I think, doomed. That's because code is the most efficient, effective, powerful way to express the essential complexity of code. Yes, it's difficult and time-consuming and complex, but overall, the only approaches that would be less difficult, time-consuming, and complex would be everything else.

It's all code (or data). That's not the issue. It's regularity that makes the difference.

Pegasus is a PEG parser that emits C#. The generated code is striking in its regularity. You get to include snippets at various points, but the generated code is regular.

It's easy to generate UI code, or data access code, or reporting code from metadata that is regular. If you have a table of UI fields with a label, column name, display format, field validation, etc, etc in a perfectly regular structure you can generate regular code (or interpret that table directly). Tools like pgAdmin do exactly that: they rely on the regularity of the DDL. One complicated bit of code to handle one field, then just repeat for each field in the table. Done. There is code in the tool, but there is no code specific to the application: not needed.

But irregularity is the killer for this approach. Something really simple like: this field or that field can be blank, but not both. Or: this date must not be a Tuesday. Or: this number must show in red if it's more than this value. Like you said: must have for this job, don't care for that job, irregular.

So: currently the only way that consistently works is method (c). Method (a) is plain wrong, but there might be a way to do method (b) if and only if we could use metadata for the regular parts and code for the irregular parts (like Pegasus).

Do you know any products that have seriously tried to do that (and not drifted off into method a)?

Sorry, I must be missing something here. Pegasus is a parser generator. It appears to let you create languages.

Don't we already have languages that allow us to effectively create CRUD applications, like C# and Java, without the -- as we understand all too well -- undesirable tradeoffs that come from creating new languages?

Compiler-comiler tools like yacc, lex and Pegasus are not programming languages. In each case they construct a data model that conforms to a well-specified formal structure (LR, regex, PEG, etc) and then generate code to compare incoming source code to that model. The code is highly repetitive and not suitable for editing. As well, they insert snippets of user-written code to add extra checks and/or do useful work. It is perfectly possible to write lexers and parsers by hand, but you get better results using these tools. I know, I've done it both ways.

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

Right, so the cold hard facts, like Supplier S# supplies Part P#, are quite uncontroversially described as data.

But what about the data about the data? "The amount of parts supplied must be non-negative". Fine, still facts/data. Unless it controls a validation, perhaps?

What about "The id of the screen that this data should be presented on" or "The size of the input box"? Still data? Or program? This is the slippery slope we're talking about where configuration data eventually becomes turing complete.

Although philosophically we could go deeper. Consider a TV-image, it's just pixels encoded in some way, just data. But when it hits the cathode ray tube or whatever the equivalent is these days, isn't it maybe a program that controls where the machine sprays its electrons?

When I take my cold hard facts about suppliers and parts and put it in an XML document, it's still data, right? Then I create another XML document with XSLT tags that will transform my suppliers and parts xml data into an html page. Obviously a program. Except that the XSLT itself doesn't do anything until the data starts pumping and controls which rules get activated. When saying "the data controls which rules get activated" there is a shift in perception that the XSLT is the machine and the XML document is the program that controls it. What about the output html? Is it just data specifying a page layout, or is it the program that draws the page?

Going in the other direction, from programming languages, LISP is for sure just lists of symbols where perception and context are the only things that decide whether it's data or program. Given the right context and interpretation, anything can be represented as a list of symbols.

Quote from AntC on February 4, 2021, 9:59 am(starting a new thread from the 'mess of disinformation', because maybe I've taken this all out of context. So I'm leaving the back-story here just in case. And the darned quote tags have got messed up, hope I've repaired them ok ...)

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pmSo really this is the nub of the problem. We can all see the template/metadata/generation part of the solution solving 80% of the problem. What we can't see is a way to leverage that but leave room for the bits that don't quite fit.

Let's say an application that genuinely solves a reasonable customer problem is complex. Within the essential complexity of the solution there is routinely:

- 80% of regular complexity

- 20% of irregular complexity.

The regular complexity is seductively easy, because we can easily capture the regularities in tables, metadata, templates, code generation and so on. We think we're nearly done. We're not.

The irregular complexity is intractably hard. We can:

- Add features to our 'regularity' solution. That's hard work, and at the end of the day it comes to resemble code. Then it's just another language and most likely it won't get used.

- Find ways to plug in bits of custom code. Learning about all those special plug in places is hard, and mostly likely too hard.

- Go back to code first, but retain bits of the 'regularity' solution to reduce code volume. This is state of the art, this is you.

So the heart of the problem as I first stated it is that we just don't know how to integrate a regularity-based system to handle regular complexity with a code-based system to handle irregular complexity, other than method (c). And even if we know parts of the answer, and try to move up a level, it rapidly gets so (accidentally) complex we give up and go back to method (c).

Yes, (c) seems -- for all its limitations and flaws -- to invariably work better than the alternatives when we take all the factors into account.

That makes sense, because that's what code is for.

Attempts to replace code with non-code, but still do all the things that makes code powerful is, I think, doomed. That's because code is the most efficient, effective, powerful way to express the essential complexity of code. Yes, it's difficult and time-consuming and complex, but overall, the only approaches that would be less difficult, time-consuming, and complex would be everything else.

It's all code (or data). That's not the issue. It's regularity that makes the difference.

Pegasus is a PEG parser that emits C#. The generated code is striking in its regularity. You get to include snippets at various points, but the generated code is regular.

It's easy to generate UI code, or data access code, or reporting code from metadata that is regular. If you have a table of UI fields with a label, column name, display format, field validation, etc, etc in a perfectly regular structure you can generate regular code (or interpret that table directly). Tools like pgAdmin do exactly that: they rely on the regularity of the DDL. One complicated bit of code to handle one field, then just repeat for each field in the table. Done. There is code in the tool, but there is no code specific to the application: not needed.

But irregularity is the killer for this approach. Something really simple like: this field or that field can be blank, but not both. Or: this date must not be a Tuesday. Or: this number must show in red if it's more than this value. Like you said: must have for this job, don't care for that job, irregular.

So: currently the only way that consistently works is method (c). Method (a) is plain wrong, but there might be a way to do method (b) if and only if we could use metadata for the regular parts and code for the irregular parts (like Pegasus).

Do you know any products that have seriously tried to do that (and not drifted off into method a)?

Sorry, I must be missing something here. Pegasus is a parser generator. It appears to let you create languages.

Don't we already have languages that allow us to effectively create CRUD applications, like C# and Java, without the -- as we understand all too well -- undesirable tradeoffs that come from creating new languages?

Compiler-comiler tools like yacc, lex and Pegasus are not programming languages. In each case they construct a data model that conforms to a well-specified formal structure (LR, regex, PEG, etc) and then generate code to compare incoming source code to that model. The code is highly repetitive and not suitable for editing. As well, they insert snippets of user-written code to add extra checks and/or do useful work. It is perfectly possible to write lexers and parsers by hand, but you get better results using these tools. I know, I've done it both ways.

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

Right, so the cold hard facts, like Supplier S# supplies Part P#, are quite uncontroversially described as data.

But what about the data about the data? "The amount of parts supplied must be non-negative". Fine, still facts/data. Unless it controls a validation, perhaps?

What about "The id of the screen that this data should be presented on" or "The size of the input box"? Still data? Or program? This is the slippery slope we're talking about where configuration data eventually becomes turing complete.

Although philosophically we could go deeper. Consider a TV-image, it's just pixels encoded in some way, just data. But when it hits the cathode ray tube or whatever the equivalent is these days, isn't it maybe a program that controls where the machine sprays its electrons?

When I take my cold hard facts about suppliers and parts and put it in an XML document, it's still data, right? Then I create another XML document with XSLT tags that will transform my suppliers and parts xml data into an html page. Obviously a program. Except that the XSLT itself doesn't do anything until the data starts pumping and controls which rules get activated. When saying "the data controls which rules get activated" there is a shift in perception that the XSLT is the machine and the XML document is the program that controls it. What about the output html? Is it just data specifying a page layout, or is it the program that draws the page?

Going in the other direction, from programming languages, LISP is for sure just lists of symbols where perception and context are the only things that decide whether it's data or program. Given the right context and interpretation, anything can be represented as a list of symbols.

Quote from dandl on February 4, 2021, 1:38 pmYes, the old thread was getting a bit tired...

I find no difficulty in distinguishing between data and code. Data is values, code is algorithms, data + algorithms = programs. The two never get mixed up until they are found together in a program.

My proposition is that a suitable data model can describe a database application well enough to be useful, precisely because it specifies aspects of the visual appearance and user interaction, which the SQL data storage model does not. As you rightly point out, a distinction has to be made between the application data (suppliers and parts) and the model data (attribute names and validation tests). So there is a model (which describes the application) and a meta-model (which describes the model). Side-note: is there are meta-meta-model that describes itself? Tempting, but IMO better not.

Given a populated model (that conforms to the meta-model), a straightforward set of algorithms (code) can create the database, forms, reports, menus and so on that constitute the core application. Then we have the problem of snippets, to do that things the model can't handle, and connectivity to other apps and libraries. But it's useful on its own.

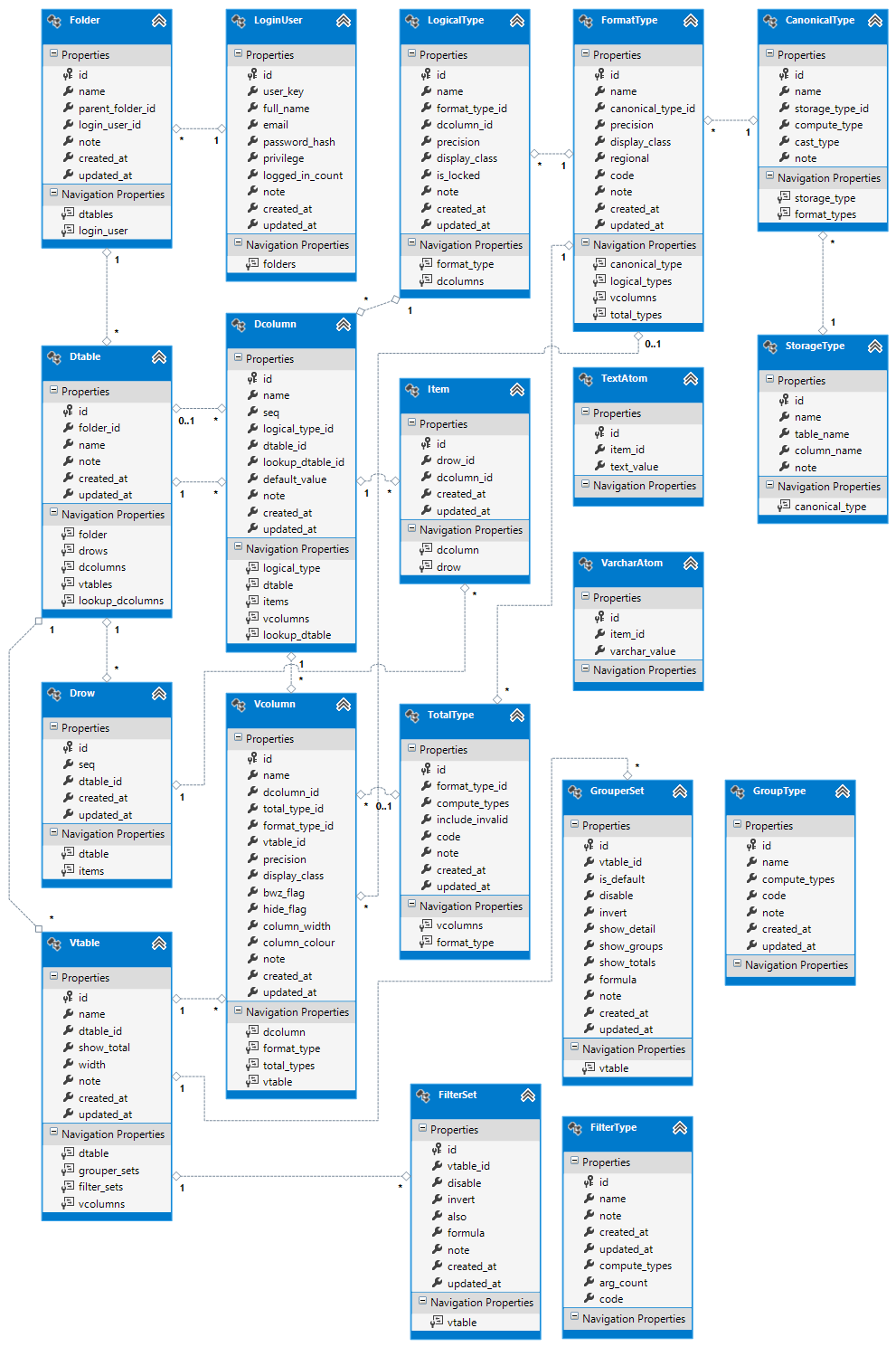

But it's not a trivial exercise. The meta-model probably runs to 20-odd tables in its own right. I rummaged through the detritus of the years and came up with the following, from about 10 years back.

Yes, the old thread was getting a bit tired...

I find no difficulty in distinguishing between data and code. Data is values, code is algorithms, data + algorithms = programs. The two never get mixed up until they are found together in a program.

My proposition is that a suitable data model can describe a database application well enough to be useful, precisely because it specifies aspects of the visual appearance and user interaction, which the SQL data storage model does not. As you rightly point out, a distinction has to be made between the application data (suppliers and parts) and the model data (attribute names and validation tests). So there is a model (which describes the application) and a meta-model (which describes the model). Side-note: is there are meta-meta-model that describes itself? Tempting, but IMO better not.

Given a populated model (that conforms to the meta-model), a straightforward set of algorithms (code) can create the database, forms, reports, menus and so on that constitute the core application. Then we have the problem of snippets, to do that things the model can't handle, and connectivity to other apps and libraries. But it's useful on its own.

But it's not a trivial exercise. The meta-model probably runs to 20-odd tables in its own right. I rummaged through the detritus of the years and came up with the following, from about 10 years back.

Uploaded files:

Quote from Dave Voorhis on February 4, 2021, 6:47 pmQuote from AntC on February 4, 2021, 9:59 am(starting a new thread from the 'mess of disinformation', because maybe I've taken this all out of context. So I'm leaving the back-story here just in case. And the darned quote tags have got messed up, hope I've repaired them ok ...)

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pmSo really this is the nub of the problem. We can all see the template/metadata/generation part of the solution solving 80% of the problem. What we can't see is a way to leverage that but leave room for the bits that don't quite fit.

Let's say an application that genuinely solves a reasonable customer problem is complex. Within the essential complexity of the solution there is routinely:

- 80% of regular complexity

- 20% of irregular complexity.

The regular complexity is seductively easy, because we can easily capture the regularities in tables, metadata, templates, code generation and so on. We think we're nearly done. We're not.

The irregular complexity is intractably hard. We can:

- Add features to our 'regularity' solution. That's hard work, and at the end of the day it comes to resemble code. Then it's just another language and most likely it won't get used.

- Find ways to plug in bits of custom code. Learning about all those special plug in places is hard, and mostly likely too hard.

- Go back to code first, but retain bits of the 'regularity' solution to reduce code volume. This is state of the art, this is you.

So the heart of the problem as I first stated it is that we just don't know how to integrate a regularity-based system to handle regular complexity with a code-based system to handle irregular complexity, other than method (c). And even if we know parts of the answer, and try to move up a level, it rapidly gets so (accidentally) complex we give up and go back to method (c).

Yes, (c) seems -- for all its limitations and flaws -- to invariably work better than the alternatives when we take all the factors into account.

That makes sense, because that's what code is for.

Attempts to replace code with non-code, but still do all the things that makes code powerful is, I think, doomed. That's because code is the most efficient, effective, powerful way to express the essential complexity of code. Yes, it's difficult and time-consuming and complex, but overall, the only approaches that would be less difficult, time-consuming, and complex would be everything else.

It's all code (or data). That's not the issue. It's regularity that makes the difference.

Pegasus is a PEG parser that emits C#. The generated code is striking in its regularity. You get to include snippets at various points, but the generated code is regular.

It's easy to generate UI code, or data access code, or reporting code from metadata that is regular. If you have a table of UI fields with a label, column name, display format, field validation, etc, etc in a perfectly regular structure you can generate regular code (or interpret that table directly). Tools like pgAdmin do exactly that: they rely on the regularity of the DDL. One complicated bit of code to handle one field, then just repeat for each field in the table. Done. There is code in the tool, but there is no code specific to the application: not needed.

But irregularity is the killer for this approach. Something really simple like: this field or that field can be blank, but not both. Or: this date must not be a Tuesday. Or: this number must show in red if it's more than this value. Like you said: must have for this job, don't care for that job, irregular.

So: currently the only way that consistently works is method (c). Method (a) is plain wrong, but there might be a way to do method (b) if and only if we could use metadata for the regular parts and code for the irregular parts (like Pegasus).

Do you know any products that have seriously tried to do that (and not drifted off into method a)?

Sorry, I must be missing something here. Pegasus is a parser generator. It appears to let you create languages.

Don't we already have languages that allow us to effectively create CRUD applications, like C# and Java, without the -- as we understand all too well -- undesirable tradeoffs that come from creating new languages?

Compiler-comiler tools like yacc, lex and Pegasus are not programming languages. In each case they construct a data model that conforms to a well-specified formal structure (LR, regex, PEG, etc) and then generate code to compare incoming source code to that model. The code is highly repetitive and not suitable for editing. As well, they insert snippets of user-written code to add extra checks and/or do useful work. It is perfectly possible to write lexers and parsers by hand, but you get better results using these tools. I know, I've done it both ways.

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

when we should have listened to the LISP (and Forth, and later, Haskell and other)

I'm confused why highlight those languages? (and which others?) Why not include other GP HLLs already mentioned like Java, or classic C, Fortran, COBOL (which was reasonably data-aware)? Where does Tutorial D go? Or Ds in general?

LISP is notable for homoiconicity. Forth is notable for extensibility (and being weird, but beside the point.) Haskell is notable for functional purity and type rigour.

All highlight how overarchingly powerful programming can be. Diminish programming and replace it with static configuration or single-purpose declaration, and you lose power.

C, Fortran, COBOL and their modern successors -- C++, Java, C#, Python, Ruby, PHP, etc. -- are fine for what they are, but none are particularly powerful, expressive, elegant, or extensible. Smalltalk simultaneously shows how that category of language should be handled (pure OO) and shouldn't be handled (dynamically typed and -- typically -- an isolated sandbox, and not in a good way.)

I like Tutorial D, of course, but I don't ascribe any special elegance or power to Tutorial D as a language. I like Java and C#, too, and they're not special either.

folks who went down this path before us and realised -- or knew to begin with -- that every non-programmable language is a lesser thing than an equivalent programming language. Thus, LISPers don't use (for example) a separate data or configuration language, they use LISP.

I wasn't aware LISP (or Scheme) is any sort of data language.

It is, but only rarely and usually within the LISP world itself. It's been pointed out (mainly among LISP fans, admittedly) that S-expressions are notionally isomorphic to XML, but completely and inherently potentially programmable (because LISP) out-of-the-box. Instead, we got XML and various XML languages, and we got... XML. Ugh.

Haskell has extensive data-structuring declarations; but is just rubbish at accessing persistent/external data or transponding between its type-safe data structures and external media. Essentially everything has to be serialised as String. Type safety gone. Most applications' persistence layer is XML/JSON as a text file.

The two key features of The Relational Model: relations as sets; tuples as indexed sets (indexed by attribute names, which are metadata); and the key feature for data structuring viz. referential integrity -- just can't be represented in Haskell (nor LISP/Scheme/Forth AFAIA).

They extend the base language, rather than trying to generate it or replace it, which only creates a rigidity, inflexibility, and impedance mismatch between the host language and the sublanguage.

The Haskell base language is lambda calculus plus sum-of-product positionally-indexed data structures. The main resource for extending is powerful polymorphism and overloading. No amount of extending that gets you to those RM key features.

True, and I'm not trying to suggest they're an ideal, only that programmability is fundamental and essential. Further non-programmable configuration/exchange/markup/whatever non-Turing-Complete languages -- and their inevitable impedance mismatches -- are not a step in the right direction.

I have no problem with XML/YAML/JSON/whatever if I never have to see it. If it lives purely at the serialisation/deserialisation level, then that's fine -- I'm no more likely to encounter it than the raw TCP/IP packets travelling over my WiFi and Ethernet network.

The moment I'm expected to intelligently manipulate them, consider their structure -- either directly or indirectly -- give me a full-fledged programming language to do it.

Haskell applications that access (SQL/NoSQL) databases dynamically might use sophisticated type-safe data structures to build their requests; but then there's some grubby type-unsafe Stringy back-end to raw SQL or LINQ/etc with all the impedance-mismatched semantics you'd expect.

There are few things worse to my mind than having to edit thousands of lines of data language -- knowing that it could be represented in a few lines of programming language -- because some other developer decided to make things "easier" for me.

I guess some Haskellers might not encounter the data sublanguage -- if they're working on the 'front end' of a mature application, in which someone else has built the data access layer. Then that someone has made it "easier" by exactly writing a lot of interface code straddling both the data language and the host language.

Where is this nirvana of Haskellers coding database access natively?

I doubt they are. Again, I mentioned Haskell as an example of programming goodness, not an ideal.

Quote from AntC on February 4, 2021, 9:59 am(starting a new thread from the 'mess of disinformation', because maybe I've taken this all out of context. So I'm leaving the back-story here just in case. And the darned quote tags have got messed up, hope I've repaired them ok ...)

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pmSo really this is the nub of the problem. We can all see the template/metadata/generation part of the solution solving 80% of the problem. What we can't see is a way to leverage that but leave room for the bits that don't quite fit.

Let's say an application that genuinely solves a reasonable customer problem is complex. Within the essential complexity of the solution there is routinely:

- 80% of regular complexity

- 20% of irregular complexity.

The regular complexity is seductively easy, because we can easily capture the regularities in tables, metadata, templates, code generation and so on. We think we're nearly done. We're not.

The irregular complexity is intractably hard. We can:

- Add features to our 'regularity' solution. That's hard work, and at the end of the day it comes to resemble code. Then it's just another language and most likely it won't get used.

- Find ways to plug in bits of custom code. Learning about all those special plug in places is hard, and mostly likely too hard.

- Go back to code first, but retain bits of the 'regularity' solution to reduce code volume. This is state of the art, this is you.

So the heart of the problem as I first stated it is that we just don't know how to integrate a regularity-based system to handle regular complexity with a code-based system to handle irregular complexity, other than method (c). And even if we know parts of the answer, and try to move up a level, it rapidly gets so (accidentally) complex we give up and go back to method (c).

Yes, (c) seems -- for all its limitations and flaws -- to invariably work better than the alternatives when we take all the factors into account.

That makes sense, because that's what code is for.

Attempts to replace code with non-code, but still do all the things that makes code powerful is, I think, doomed. That's because code is the most efficient, effective, powerful way to express the essential complexity of code. Yes, it's difficult and time-consuming and complex, but overall, the only approaches that would be less difficult, time-consuming, and complex would be everything else.

It's all code (or data). That's not the issue. It's regularity that makes the difference.

Pegasus is a PEG parser that emits C#. The generated code is striking in its regularity. You get to include snippets at various points, but the generated code is regular.

It's easy to generate UI code, or data access code, or reporting code from metadata that is regular. If you have a table of UI fields with a label, column name, display format, field validation, etc, etc in a perfectly regular structure you can generate regular code (or interpret that table directly). Tools like pgAdmin do exactly that: they rely on the regularity of the DDL. One complicated bit of code to handle one field, then just repeat for each field in the table. Done. There is code in the tool, but there is no code specific to the application: not needed.

But irregularity is the killer for this approach. Something really simple like: this field or that field can be blank, but not both. Or: this date must not be a Tuesday. Or: this number must show in red if it's more than this value. Like you said: must have for this job, don't care for that job, irregular.

So: currently the only way that consistently works is method (c). Method (a) is plain wrong, but there might be a way to do method (b) if and only if we could use metadata for the regular parts and code for the irregular parts (like Pegasus).

Do you know any products that have seriously tried to do that (and not drifted off into method a)?

Sorry, I must be missing something here. Pegasus is a parser generator. It appears to let you create languages.

Don't we already have languages that allow us to effectively create CRUD applications, like C# and Java, without the -- as we understand all too well -- undesirable tradeoffs that come from creating new languages?

Compiler-comiler tools like yacc, lex and Pegasus are not programming languages. In each case they construct a data model that conforms to a well-specified formal structure (LR, regex, PEG, etc) and then generate code to compare incoming source code to that model. The code is highly repetitive and not suitable for editing. As well, they insert snippets of user-written code to add extra checks and/or do useful work. It is perfectly possible to write lexers and parsers by hand, but you get better results using these tools. I know, I've done it both ways.

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

when we should have listened to the LISP (and Forth, and later, Haskell and other)

I'm confused why highlight those languages? (and which others?) Why not include other GP HLLs already mentioned like Java, or classic C, Fortran, COBOL (which was reasonably data-aware)? Where does Tutorial D go? Or Ds in general?

LISP is notable for homoiconicity. Forth is notable for extensibility (and being weird, but beside the point.) Haskell is notable for functional purity and type rigour.

All highlight how overarchingly powerful programming can be. Diminish programming and replace it with static configuration or single-purpose declaration, and you lose power.

C, Fortran, COBOL and their modern successors -- C++, Java, C#, Python, Ruby, PHP, etc. -- are fine for what they are, but none are particularly powerful, expressive, elegant, or extensible. Smalltalk simultaneously shows how that category of language should be handled (pure OO) and shouldn't be handled (dynamically typed and -- typically -- an isolated sandbox, and not in a good way.)

I like Tutorial D, of course, but I don't ascribe any special elegance or power to Tutorial D as a language. I like Java and C#, too, and they're not special either.

folks who went down this path before us and realised -- or knew to begin with -- that every non-programmable language is a lesser thing than an equivalent programming language. Thus, LISPers don't use (for example) a separate data or configuration language, they use LISP.

I wasn't aware LISP (or Scheme) is any sort of data language.

It is, but only rarely and usually within the LISP world itself. It's been pointed out (mainly among LISP fans, admittedly) that S-expressions are notionally isomorphic to XML, but completely and inherently potentially programmable (because LISP) out-of-the-box. Instead, we got XML and various XML languages, and we got... XML. Ugh.

Haskell has extensive data-structuring declarations; but is just rubbish at accessing persistent/external data or transponding between its type-safe data structures and external media. Essentially everything has to be serialised as String. Type safety gone. Most applications' persistence layer is XML/JSON as a text file.

The two key features of The Relational Model: relations as sets; tuples as indexed sets (indexed by attribute names, which are metadata); and the key feature for data structuring viz. referential integrity -- just can't be represented in Haskell (nor LISP/Scheme/Forth AFAIA).

They extend the base language, rather than trying to generate it or replace it, which only creates a rigidity, inflexibility, and impedance mismatch between the host language and the sublanguage.

The Haskell base language is lambda calculus plus sum-of-product positionally-indexed data structures. The main resource for extending is powerful polymorphism and overloading. No amount of extending that gets you to those RM key features.

True, and I'm not trying to suggest they're an ideal, only that programmability is fundamental and essential. Further non-programmable configuration/exchange/markup/whatever non-Turing-Complete languages -- and their inevitable impedance mismatches -- are not a step in the right direction.

I have no problem with XML/YAML/JSON/whatever if I never have to see it. If it lives purely at the serialisation/deserialisation level, then that's fine -- I'm no more likely to encounter it than the raw TCP/IP packets travelling over my WiFi and Ethernet network.

The moment I'm expected to intelligently manipulate them, consider their structure -- either directly or indirectly -- give me a full-fledged programming language to do it.

Haskell applications that access (SQL/NoSQL) databases dynamically might use sophisticated type-safe data structures to build their requests; but then there's some grubby type-unsafe Stringy back-end to raw SQL or LINQ/etc with all the impedance-mismatched semantics you'd expect.

There are few things worse to my mind than having to edit thousands of lines of data language -- knowing that it could be represented in a few lines of programming language -- because some other developer decided to make things "easier" for me.

I guess some Haskellers might not encounter the data sublanguage -- if they're working on the 'front end' of a mature application, in which someone else has built the data access layer. Then that someone has made it "easier" by exactly writing a lot of interface code straddling both the data language and the host language.

Where is this nirvana of Haskellers coding database access natively?

I doubt they are. Again, I mentioned Haskell as an example of programming goodness, not an ideal.

Quote from dandl on February 4, 2021, 11:58 pmSo a simple question: if I gave you an application data model along the lines of the schema in my previous post, could you write the code to make it go live? Code generation, interpreter, virtual machine, whatever (but not by manually transcribing it into hand-written code).

If not, why not? What is missing (that can't easily be added)?

If so, what tools would you choose (especially for the UI)?

So a simple question: if I gave you an application data model along the lines of the schema in my previous post, could you write the code to make it go live? Code generation, interpreter, virtual machine, whatever (but not by manually transcribing it into hand-written code).

If not, why not? What is missing (that can't easily be added)?

If so, what tools would you choose (especially for the UI)?

Quote from AntC on February 5, 2021, 1:01 amQuote from Dave Voorhis on February 4, 2021, 6:47 pmQuote from AntC on February 4, 2021, 9:59 am

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pm

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

when we should have listened to the LISP (and Forth, and later, Haskell and other)

I'm confused why highlight those languages? (and which others?) Why not include other GP HLLs already mentioned like Java, or classic C, Fortran, COBOL (which was reasonably data-aware)? Where does Tutorial D go? Or Ds in general?

LISP is notable for homoiconicity. Forth is notable for extensibility (and being weird, but beside the point.) Haskell is notable for functional purity and type rigour.

All highlight how overarchingly powerful programming can be.

Hmm. Until I see a relational schema expressed in Haskell, I don't see enough power or the right sort of power. What could be simpler than set theory?

Diminish programming and replace it with static configuration or single-purpose declaration, and you lose power.

C, Fortran, COBOL and their modern successors -- C++, Java, C#, Python, Ruby, PHP, etc. -- are fine for what they are, but none are particularly powerful, expressive, elegant, or extensible. Smalltalk simultaneously shows how that category of language should be handled (pure OO) and shouldn't be handled (dynamically typed and -- typically -- an isolated sandbox, and not in a good way.)

I like Tutorial D, of course, but I don't ascribe any special elegance or power to Tutorial D as a language. I like Java and C#, too, and they're not special either.

folks who went down this path before us and realised -- or knew to begin with -- that every non-programmable language is a lesser thing than an equivalent programming language. Thus, LISPers don't use (for example) a separate data or configuration language, they use LISP.

I wasn't aware LISP (or Scheme) is any sort of data language.

It is, but only rarely and usually within the LISP world itself. It's been pointed out (mainly among LISP fans, admittedly) that S-expressions are notionally isomorphic to XML, but completely and inherently potentially programmable (because LISP) out-of-the-box. Instead, we got XML and various XML languages, and we got... XML. Ugh.

I don't count XML as a data language, hence why I'm not seeing it in LISP either. XML is capable of expressing structured data; if some particular file/stream contains structured data, that only emerges when you've got to the end and haven't found anything that doesn't conform with the structure. But then HTML is capable of expressing structured data; ditto.

Haskell has extensive data-structuring declarations; but is just rubbish at accessing persistent/external data or transponding between its type-safe data structures and external media. Essentially everything has to be serialised as String. Type safety gone. Most applications' persistence layer is XML/JSON as a text file.

The two key features of The Relational Model: relations as sets; tuples as indexed sets (indexed by attribute names, which are metadata); and the key feature for data structuring viz. referential integrity -- just can't be represented in Haskell (nor LISP/Scheme/Forth AFAIA).

They extend the base language, rather than trying to generate it or replace it, which only creates a rigidity, inflexibility, and impedance mismatch between the host language and the sublanguage.

The Haskell base language is lambda calculus plus sum-of-product positionally-indexed data structures. The main resource for extending is powerful polymorphism and overloading. No amount of extending that gets you to those RM key features.

True, and I'm not trying to suggest they're an ideal, only that programmability is fundamental and essential. Further non-programmable configuration/exchange/markup/whatever non-Turing-Complete languages -- and their inevitable impedance mismatches -- are not a step in the right direction.

You haven't persuaded me I can do without a DDL -- by which I mean of course a Truly Relational ® DDL.

And the industry appears not to have an abstraction in any GP HLLs that can grok relational DDL and the content that conforms to it. We can rearrange the deckchairs, but an impedance mismatch seems inevitable.

... Again, I mentioned Haskell as an example of programming goodness, not an ideal.

What became of languages based on D.L.Childs' framework? Or perhaps we embed something Childs-like as a Domain-Specific Language inside a GP language? But still there's the impedance mismatch.

Quote from Dave Voorhis on February 4, 2021, 6:47 pmQuote from AntC on February 4, 2021, 9:59 am

Quote from Dave Voorhis on February 2, 2021, 10:11 amQuote from dandl on February 2, 2021, 5:49 amQuote from Dave Voorhis on February 1, 2021, 1:38 pmQuote from dandl on February 1, 2021, 1:31 pm

SQL is not a programming language. It is arguably a specification for a data structure that will be interpreted by the RDBMS query optimiser and query executor. It has an embedded expression evaluator, but the rest is just data.

Xml, JSON and Yaml are not programming languages. Each is a language for specifying structured data.

Exactly the problem.

We've arbitrarily divided languages into "not programming language" / "data language" vs "programming language",

"We"? I think the division is in the nature of the subject: data is persistent, and has persistent structure, because it represents state/some facts in some mini-world. The data only maps to the state/facts on condition its content fits the structure. The program is ephemeral; it doesn't 'know' anything about the state except by reading the data. Separating the data with its structure has the side-benefit that different tools/different programming languages can all access it.

when we should have listened to the LISP (and Forth, and later, Haskell and other)

I'm confused why highlight those languages? (and which others?) Why not include other GP HLLs already mentioned like Java, or classic C, Fortran, COBOL (which was reasonably data-aware)? Where does Tutorial D go? Or Ds in general?

LISP is notable for homoiconicity. Forth is notable for extensibility (and being weird, but beside the point.) Haskell is notable for functional purity and type rigour.

All highlight how overarchingly powerful programming can be.

Hmm. Until I see a relational schema expressed in Haskell, I don't see enough power or the right sort of power. What could be simpler than set theory?

Diminish programming and replace it with static configuration or single-purpose declaration, and you lose power.

C, Fortran, COBOL and their modern successors -- C++, Java, C#, Python, Ruby, PHP, etc. -- are fine for what they are, but none are particularly powerful, expressive, elegant, or extensible. Smalltalk simultaneously shows how that category of language should be handled (pure OO) and shouldn't be handled (dynamically typed and -- typically -- an isolated sandbox, and not in a good way.)

I like Tutorial D, of course, but I don't ascribe any special elegance or power to Tutorial D as a language. I like Java and C#, too, and they're not special either.

folks who went down this path before us and realised -- or knew to begin with -- that every non-programmable language is a lesser thing than an equivalent programming language. Thus, LISPers don't use (for example) a separate data or configuration language, they use LISP.

I wasn't aware LISP (or Scheme) is any sort of data language.

It is, but only rarely and usually within the LISP world itself. It's been pointed out (mainly among LISP fans, admittedly) that S-expressions are notionally isomorphic to XML, but completely and inherently potentially programmable (because LISP) out-of-the-box. Instead, we got XML and various XML languages, and we got... XML. Ugh.

I don't count XML as a data language, hence why I'm not seeing it in LISP either. XML is capable of expressing structured data; if some particular file/stream contains structured data, that only emerges when you've got to the end and haven't found anything that doesn't conform with the structure. But then HTML is capable of expressing structured data; ditto.

Haskell has extensive data-structuring declarations; but is just rubbish at accessing persistent/external data or transponding between its type-safe data structures and external media. Essentially everything has to be serialised as String. Type safety gone. Most applications' persistence layer is XML/JSON as a text file.

The two key features of The Relational Model: relations as sets; tuples as indexed sets (indexed by attribute names, which are metadata); and the key feature for data structuring viz. referential integrity -- just can't be represented in Haskell (nor LISP/Scheme/Forth AFAIA).

They extend the base language, rather than trying to generate it or replace it, which only creates a rigidity, inflexibility, and impedance mismatch between the host language and the sublanguage.

The Haskell base language is lambda calculus plus sum-of-product positionally-indexed data structures. The main resource for extending is powerful polymorphism and overloading. No amount of extending that gets you to those RM key features.

True, and I'm not trying to suggest they're an ideal, only that programmability is fundamental and essential. Further non-programmable configuration/exchange/markup/whatever non-Turing-Complete languages -- and their inevitable impedance mismatches -- are not a step in the right direction.

You haven't persuaded me I can do without a DDL -- by which I mean of course a Truly Relational ® DDL.

And the industry appears not to have an abstraction in any GP HLLs that can grok relational DDL and the content that conforms to it. We can rearrange the deckchairs, but an impedance mismatch seems inevitable.

... Again, I mentioned Haskell as an example of programming goodness, not an ideal.

What became of languages based on D.L.Childs' framework? Or perhaps we embed something Childs-like as a Domain-Specific Language inside a GP language? But still there's the impedance mismatch.

Quote from AntC on February 5, 2021, 1:23 amQuote from dandl on February 4, 2021, 1:38 pmI find no difficulty in distinguishing between data and code. Data is values, code is algorithms, data + algorithms = programs. The two never get mixed up until they are found together in a program.

My proposition is that a suitable data model can describe a database application well enough to be useful, precisely because it specifies aspects of the visual appearance and user interaction, ...

XML seems to think data is intrinsically hierarchical (Item-lines belong to Orders belong to Customers; so Product descriptions must belong to Item-lines; so Quantity-on-Hand must belong to Products ... must belong to Customers; but no, the same QoH 'belongs to' all Customers. Model fail.) Then simply no: a Relational data model can express referential integrity, but it has to be neutral as to the 'belongs to' relationships -- they're in the users' minds/different users want different forms of interaction.

If you want different users to interact differently over the same base data structure, you need to provide different views. Which have to be defined in a language/algebra; and be updatable-through. And you need the full power of Boolean logic + that algebra to express nitty-gritty validation/business rules. Still that doesn't express the dynamics of the business: to fulfill a customer's order you need enough QoH, and to get there you need to manufacture/order from Suppliers, and ..., and ..., back through the supply chain; and you need to prioritise demand from multiple Customers for limited/time-varying QoH.

Then an auto-gen'd CRUD-style application is good enough for 'static' maintenance. Not for Order-entry with Available-to-Promise feedback, nor for Enterprise Resource Planning.

Quote from dandl on February 4, 2021, 1:38 pmI find no difficulty in distinguishing between data and code. Data is values, code is algorithms, data + algorithms = programs. The two never get mixed up until they are found together in a program.

My proposition is that a suitable data model can describe a database application well enough to be useful, precisely because it specifies aspects of the visual appearance and user interaction, ...

XML seems to think data is intrinsically hierarchical (Item-lines belong to Orders belong to Customers; so Product descriptions must belong to Item-lines; so Quantity-on-Hand must belong to Products ... must belong to Customers; but no, the same QoH 'belongs to' all Customers. Model fail.) Then simply no: a Relational data model can express referential integrity, but it has to be neutral as to the 'belongs to' relationships -- they're in the users' minds/different users want different forms of interaction.

If you want different users to interact differently over the same base data structure, you need to provide different views. Which have to be defined in a language/algebra; and be updatable-through. And you need the full power of Boolean logic + that algebra to express nitty-gritty validation/business rules. Still that doesn't express the dynamics of the business: to fulfill a customer's order you need enough QoH, and to get there you need to manufacture/order from Suppliers, and ..., and ..., back through the supply chain; and you need to prioritise demand from multiple Customers for limited/time-varying QoH.

Then an auto-gen'd CRUD-style application is good enough for 'static' maintenance. Not for Order-entry with Available-to-Promise feedback, nor for Enterprise Resource Planning.

Quote from dandl on February 5, 2021, 8:18 amQuote from AntC on February 5, 2021, 1:23 amQuote from dandl on February 4, 2021, 1:38 pmI find no difficulty in distinguishing between data and code. Data is values, code is algorithms, data + algorithms = programs. The two never get mixed up until they are found together in a program.

My proposition is that a suitable data model can describe a database application well enough to be useful, precisely because it specifies aspects of the visual appearance and user interaction, ...

XML seems to think data is intrinsically hierarchical (Item-lines belong to Orders belong to Customers; so Product descriptions must belong to Item-lines; so Quantity-on-Hand must belong to Products ... must belong to Customers; but no, the same QoH 'belongs to' all Customers. Model fail.) Then simply no: a Relational data model can express referential integrity, but it has to be neutral as to the 'belongs to' relationships -- they're in the users' minds/different users want different forms of interaction.

You make a very good point. The traditional data modeller is very big on roles and relationships: Product 'is shipped by' Supplier, but the RM leaves it all in the eyes of the beholder. In the most favoured case we can make educated guesses about relatonships from attribute names and constraints; at the opposite extreme we may have absolutely no idea. Yes, TTM defines FK constraints but that's well short of what's needed. It doesn't make them queryable.

My view is that the application data model I'm talking about should crystallise relationships so that they can be made visible in the software. As per the ER diagram, we should explicitly specify the one-to-many relationship between supplier and product, and the field names involved. From this we can generate the SQL and constraints, and also the corresponding master-detail UI form. It's not in the RM or the SQL DDL, but it is in the application model.

If you want different users to interact differently over the same base data structure, you need to provide different views. Which have to be defined in a language/algebra; and be updatable-through. And you need the full power of Boolean logic + that algebra to express nitty-gritty validation/business rules. Still that doesn't express the dynamics of the business: to fulfill a customer's order you need enough QoH, and to get there you need to manufacture/order from Suppliers, and ..., and ..., back through the supply chain; and you need to prioritise demand from multiple Customers for limited/time-varying QoH.

Then an auto-gen'd CRUD-style application is good enough for 'static' maintenance. Not for Order-entry with Available-to-Promise feedback, nor for Enterprise Resource Planning.

In the model I showed there is a Data/View separation for exactly that purpose. This kind of requirement has a regularity to it that can be captured. But I do not propose to include business rules: they are algorithmic in nature and best expressed in code. I do propose 3 sub-languages:

- Regex for validation

- Format strings for display

- Expression evaluation, both for validation and for calculated fields.

Again, these are regular requirements.

Quote from AntC on February 5, 2021, 1:23 amQuote from dandl on February 4, 2021, 1:38 pmI find no difficulty in distinguishing between data and code. Data is values, code is algorithms, data + algorithms = programs. The two never get mixed up until they are found together in a program.

My proposition is that a suitable data model can describe a database application well enough to be useful, precisely because it specifies aspects of the visual appearance and user interaction, ...

XML seems to think data is intrinsically hierarchical (Item-lines belong to Orders belong to Customers; so Product descriptions must belong to Item-lines; so Quantity-on-Hand must belong to Products ... must belong to Customers; but no, the same QoH 'belongs to' all Customers. Model fail.) Then simply no: a Relational data model can express referential integrity, but it has to be neutral as to the 'belongs to' relationships -- they're in the users' minds/different users want different forms of interaction.

You make a very good point. The traditional data modeller is very big on roles and relationships: Product 'is shipped by' Supplier, but the RM leaves it all in the eyes of the beholder. In the most favoured case we can make educated guesses about relatonships from attribute names and constraints; at the opposite extreme we may have absolutely no idea. Yes, TTM defines FK constraints but that's well short of what's needed. It doesn't make them queryable.

My view is that the application data model I'm talking about should crystallise relationships so that they can be made visible in the software. As per the ER diagram, we should explicitly specify the one-to-many relationship between supplier and product, and the field names involved. From this we can generate the SQL and constraints, and also the corresponding master-detail UI form. It's not in the RM or the SQL DDL, but it is in the application model.